The documentation section provides a comprehensive guide to using Solo to launch a Hiero Consensus Node network, including setup instructions, usage guides, and information for developers. It covers everything from installation to advanced features and troubleshooting.


Documentation

- 1: Getting Started

- 2: Solo User Guide

- 3: Advanced Network Deployments

- 4: Solo CLI User Manual

- 5: Updated CLI Command Mappings

- 6: Solo CLI Commands

- 7: FAQ

- 8: Troubleshooting

- 9: Using Solo with Mirror Node

- 10: Using Solo with Hiero JavaScript SDK

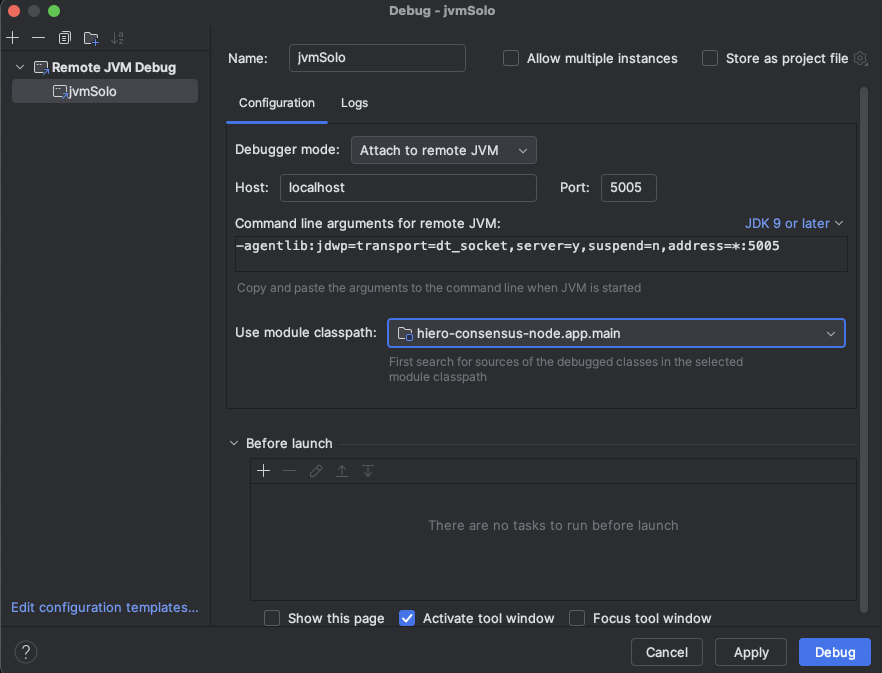

- 11: Hiero Consensus Node Platform Developer

- 12: Hiero Consensus Node Execution Developer

- 13: Attach JVM Debugger and Retrieve Logs

- 14: Using Network Load Generator with Solo

- 15: Using Environment Variables

- 16: Solo CI Workflow

1 - Getting Started

Solo

An opinionated CLI tool to deploy and manage standalone test networks.

Releases

Solo releases are supported for one month after their release date. Upgrade to the latest version to benefit from new features and improvements. Every quarter a version is designated as LTS (Long-Term Support) and supported for three months.

Current Releases

| Solo Version | Node.js | Kind | Solo Chart | Hedera | Kubernetes | Kubectl | Helm | k9s | Docker Resources | Release Date | End of Support |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.56.0 (LTS) | >= 22.0.0 (lts/jod) | >= v0.29.0 | v0.60.2 | v0.68.7-rc.1 | >= v1.32.2 | >= v1.32.2 | v3.14.2 | >= v0.27.4 | Memory >= 12GB, CPU cores >= 6 | 2026-02-12 | 2026-05-12 |
| 0.55.0 | >= 22.0.0 (lts/jod) | >= v0.29.0 | v0.60.2 | v0.68.7-rc.1 | >= v1.32.2 | >= v1.32.2 | v3.14.2 | >= v0.27.4 | Memory >= 12GB, CPU cores >= 6 | 2026-02-05 | 2026-03-05 |
| 0.54.0 (LTS) | >= 22.0.0 (lts/jod) | >= v0.29.0 | v0.59.0 | v0.68.6+ | >= v1.32.2 | >= v1.32.2 | v3.14.2 | >= v0.27.4 | Memory >= 12GB, CPU cores >= 6 | 2026-01-27 | 2026-04-27 |
| 0.53.0 | >= 22.0.0 (lts/jod) | >= v0.29.0 | v0.58.1 | v0.67.2+ | >= v1.32.2 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 12GB, CPU cores >= 6 | 2026-01-15 | 2026-02-15 |
| 0.52.0 (LTS) | >= 22.0.0 (lts/jod) | >= v0.26.0 | v0.58.1 | v0.67.2+ | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 12GB, CPU cores >= 6 | 2025-12-11 | 2026-03-11 |
| 0.50.0 (LTS) | >= 22.0.0 (lts/jod) | >= v0.26.0 | v0.57.0 | v0.66.0+ | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 12GB, CPU cores >= 6 | 2025-11-13 | 2026-02-13 |

To see a list of legacy releases, please check the legacy versions documentation page.

Hardware Requirements

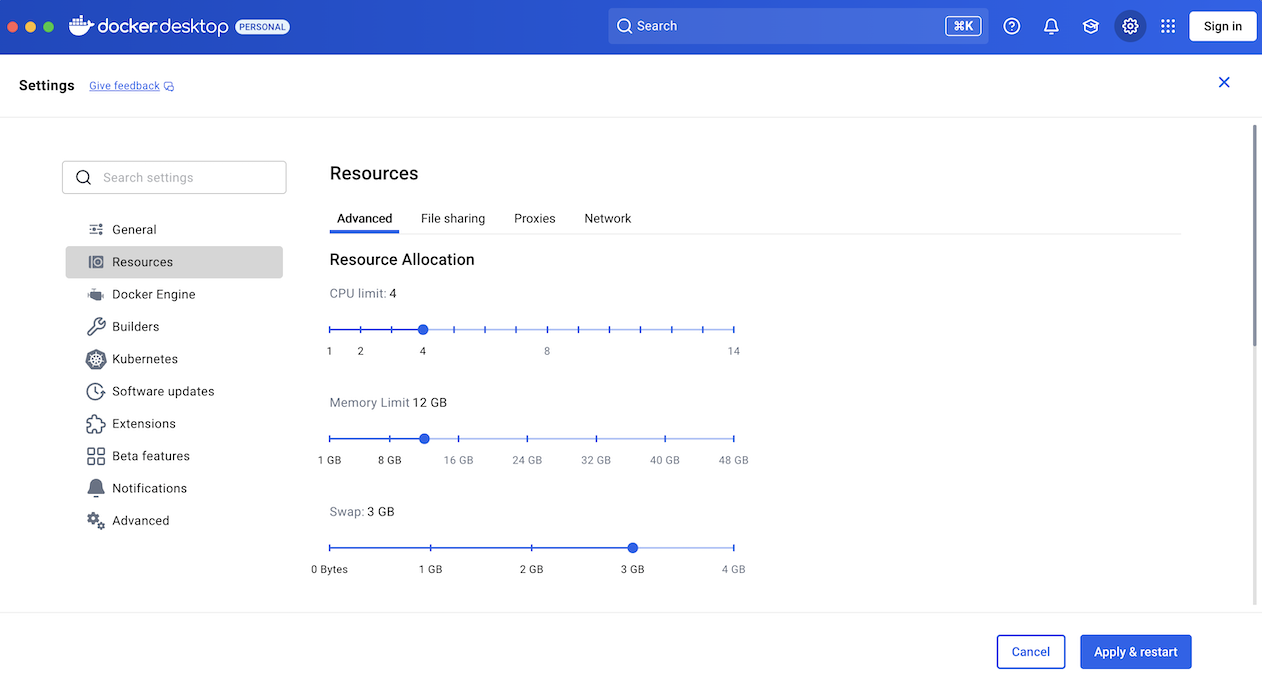

Docker Desktop (or Docker Engine / Podman on Linux) with at least 12GB of memory and 6 CPU cores.

Installation

Install Solo via Homebrew (macOS, Linux, WSL2):

brew install hiero-ledger/tools/solo

Or via npm (requires Node.js >= 22.0.0):

npm install -g @hashgraph/solo@latest

For detailed platform-specific instructions, see the Solo User Guide.

Documentation

If you have installed Solo, we recommend starting with the one-shot network deployment command, which is described in the Solo docs.

Contributing

Contributions are welcome. See the contributing guide to learn how you can get involved.

Code of Conduct

This project is governed by the Contributor Covenant Code of Conduct. By participating, you are expected to uphold this code of conduct.

License

2 - Solo User Guide

Introduction

Welcome to the world of Hiero development! If you’re looking to build and test applications on the Hiero network but don’t want to spend HBAR on testnet or mainnet transactions, you’ve come to the right place. Solo is your gateway to running your own local Hiero test network, giving you complete control over your development environment.

Solo is an opinionated command-line interface (CLI) tool designed to deploy and manage standalone Hiero test networks. Think of it as your personal Hiero sandbox where you can experiment, test features, and develop applications without any external dependencies or costs.

By the end of this tutorial, you’ll have your own Hiero test network running locally, complete with consensus nodes, mirror nodes, and all the infrastructure needed to submit transactions and test your applications.

System Requirements

First, check that your computer meets these minimum specifications (for a single-node network):

- Memory: At least 12 GB (16 GB recommended for smoother performance)

- CPU: Minimum 6 cores (8 cores recommended)

- Storage: At least 20 GB of free disk space

- Operating System: macOS, Linux, or Windows with WSL2
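As a rough preflight, the memory and CPU minimums above can be compared against what the host reports. The sketch below is not part of Solo: the helper names (meets_minimum, detect_mem_gb, detect_cpus) are hypothetical, and it measures the host rather than the resources allocated to Docker, so a pass is necessary but not sufficient.

```shell
# Minimums for a single-node network, taken from the list above.
MIN_MEM_GB=12
MIN_CPUS=6

# meets_minimum MEM_GB CPUS: succeeds when both values reach the minimums.
meets_minimum() {
  [ "$1" -ge "$MIN_MEM_GB" ] && [ "$2" -ge "$MIN_CPUS" ]
}

# detect_mem_gb: print total physical memory, rounded to the nearest GB.
detect_mem_gb() {
  if [ -r /proc/meminfo ]; then
    # Linux and WSL2: MemTotal is reported in kB.
    awk '/^MemTotal:/ { printf "%d\n", $2 / 1048576 + 0.5 }' /proc/meminfo
  else
    # macOS: hw.memsize is reported in bytes; fall back to 0 if unavailable.
    mem_bytes="$(sysctl -n hw.memsize 2>/dev/null || echo 0)"
    echo $(( (mem_bytes + 536870912) / 1073741824 ))
  fi
}

# detect_cpus: print the number of online logical CPU cores.
detect_cpus() {
  getconf _NPROCESSORS_ONLN
}

if meets_minimum "$(detect_mem_gb)" "$(detect_cpus)"; then
  echo "Host meets the single-node minimums"
else
  echo "Host is below the single-node minimums"
fi
```

On macOS and Windows, Docker Desktop applies its own memory and CPU limits, so the values under Settings > Resources still need to be checked separately.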

Installation

Choose your platform below:

macOS

# 1. Install Homebrew (if not already installed)

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

# 2. Install Docker Desktop

# Download from: https://www.docker.com/products/docker-desktop

# Start Docker Desktop and allocate at least 12 GB of memory:

# Docker Desktop > Settings > Resources > Memory

# 3. Remove existing npm-based installs

if command -v npm >/dev/null 2>&1; then npm uninstall -g @hashgraph/solo >/dev/null 2>&1 || true; fi

# 4. Install Solo (this installs all other dependencies automatically)

brew tap hiero-ledger/tools

brew update

brew install solo

# Verify the installation

solo --version

Linux

# 1. Install Homebrew for Linux

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

# Add Homebrew to your PATH

echo 'eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"' >> ~/.bashrc

eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

# 2. Install Docker Engine

# For Ubuntu/Debian:

sudo apt-get update

sudo apt-get install -y docker.io

sudo systemctl enable docker

sudo systemctl start docker

sudo usermod -aG docker ${USER}

# Log out and back in for group changes to take effect

# 3. Install kubectl

sudo apt update && sudo apt install -y ca-certificates curl

ARCH="$(dpkg --print-architecture)"

curl -fsSLo kubectl "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/${ARCH}/kubectl"

chmod +x kubectl

sudo mv kubectl /usr/local/bin/kubectl

# 4. Remove existing npm-based installs

if command -v npm >/dev/null 2>&1; then npm uninstall -g @hashgraph/solo >/dev/null 2>&1 || true; fi

# 5. Install Solo (this installs the remaining dependencies automatically)

brew tap hiero-ledger/tools

brew update

brew install solo

# Verify the installation

solo --version

Windows (WSL2)

# First, in Windows PowerShell (as Administrator):

# wsl --install Ubuntu

# Then reboot and open the Ubuntu terminal.

# All commands below run in your Ubuntu (WSL2) terminal.

# 1. Install Homebrew for Linux

/bin/bash -c "$(curl -fsSL https://raw.githubusercontent.com/Homebrew/install/HEAD/install.sh)"

# Add Homebrew to your PATH

echo 'eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"' >> ~/.bashrc

eval "$(/home/linuxbrew/.linuxbrew/bin/brew shellenv)"

# 2. Install Docker Desktop for Windows

# Download from: https://www.docker.com/products/docker-desktop

# Enable WSL2 integration: Docker Desktop > Settings > Resources > WSL Integration

# Allocate at least 12 GB of memory: Docker Desktop > Settings > Resources

# 3. Install kubectl

sudo apt update && sudo apt install -y ca-certificates curl

ARCH="$(dpkg --print-architecture)"

curl -fsSLo kubectl "https://dl.k8s.io/release/$(curl -L -s https://dl.k8s.io/release/stable.txt)/bin/linux/${ARCH}/kubectl"

chmod +x kubectl

sudo mv kubectl /usr/local/bin/kubectl

# 4. Remove existing npm-based installs

if command -v npm >/dev/null 2>&1; then npm uninstall -g @hashgraph/solo >/dev/null 2>&1 || true; fi

# 5. Install Solo (this installs the remaining dependencies automatically)

brew tap hiero-ledger/tools

brew update

brew install solo

# Verify the installation

solo --version

# IMPORTANT: Always run Solo commands from this WSL2 terminal.

Alternative: Install via npm (for contributors/advanced users)

If you need more control over dependencies or are contributing to Solo development:

# Requires Node.js >= 22.0.0 and Kind to be installed separately

npm install -g @hashgraph/solo

See the Development Guide for complete contributor setup instructions.

Troubleshooting Installation

⚠️ Having trouble? Try cleaning up first

If you’re experiencing issues installing or upgrading Solo (e.g., conflicts with a previous installation), you may need to clean up your environment first.

⚠️ Warning: The commands below will delete Solo-managed Kind clusters and remove your Solo home directory (~/.solo).

# Delete only Solo-managed Kind clusters (names starting with "solo")

kind get clusters | grep '^solo' | while read -r cluster; do

  kind delete cluster -n "$cluster"

done

# Remove Solo configuration and cache

rm -rf ~/.solo

After cleaning up, retry the installation with brew install hiero-ledger/tools/solo.

Deploying Your Network

With Solo installed, deploying a complete Hiero test network takes just one command:

solo one-shot single deploy

That’s it! This single command automatically:

- Creates a local Kubernetes cluster

- Sets up all required configurations

- Deploys a consensus node

- Deploys a mirror node with explorer UI

- Deploys a JSON RPC relay

- Configures port-forwarding so you can access services immediately

- Generates cryptographic keys

- Creates test accounts

The deployment takes a few minutes. When complete, your network is ready to use.

What Gets Deployed

| Component | Description |

|---|---|

| Consensus Node | Hiero consensus node for processing transactions |

| Mirror Node | Stores and serves historical data |

| Explorer UI | Web interface for viewing accounts and transactions |

| JSON RPC Relay | Ethereum-compatible JSON RPC interface |

Multiple Node Deployment

For testing consensus scenarios or multi-node behavior, you can deploy multiple consensus nodes by specifying the --num-consensus-nodes flag:

solo one-shot single deploy --num-consensus-nodes 3

This deploys 3 consensus nodes along with the same components as the single-node setup (mirror node, explorer, relay).

📝 Note: Multiple node deployments require more resources. Ensure you have at least 16 GB of memory and 8 CPU cores allocated to Docker.

When finished:

solo one-shot single destroy

Working with Your Network

Network Endpoints

After deployment, your network services are automatically available at:

| Service | Endpoint | Description |

|---|---|---|

| Explorer UI | http://localhost:8080 | Web UI for inspecting network |

| Consensus Node | localhost:50211 | gRPC endpoint for transactions |

| Mirror Node REST | http://localhost:5551 | REST API for queries |

| JSON RPC Relay | localhost:7546 | Ethereum-compatible JSON RPC |

Open http://localhost:8080 in your browser to explore your network.
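To confirm that port-forwarding is in place, each endpoint from the table can be probed for a listening TCP socket. This is a minimal sketch, assuming bash is available; the port_open helper is hypothetical and not a Solo command.

```shell
# port_open HOST PORT: succeed when a TCP connection can be opened.
# Uses bash's /dev/tcp redirection, so run this with bash rather than sh.
port_open() {
  (exec 3<>"/dev/tcp/$1/$2") 2>/dev/null
}

# Names and ports taken from the endpoint table above.
for entry in "Explorer UI:8080" "Consensus Node gRPC:50211" \
             "Mirror Node REST:5551" "JSON RPC Relay:7546"; do
  name="${entry%:*}"
  port="${entry##*:}"
  if port_open localhost "$port"; then
    echo "open    $port  $name"
  else
    echo "closed  $port  $name"
  fi
done
```

An open port only shows that something is listening; it does not prove the service behind it is healthy, so the pod status check remains the more reliable signal.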

Check Pod Status

To verify all components are running:

kubectl get pods -A | grep -v kube-system

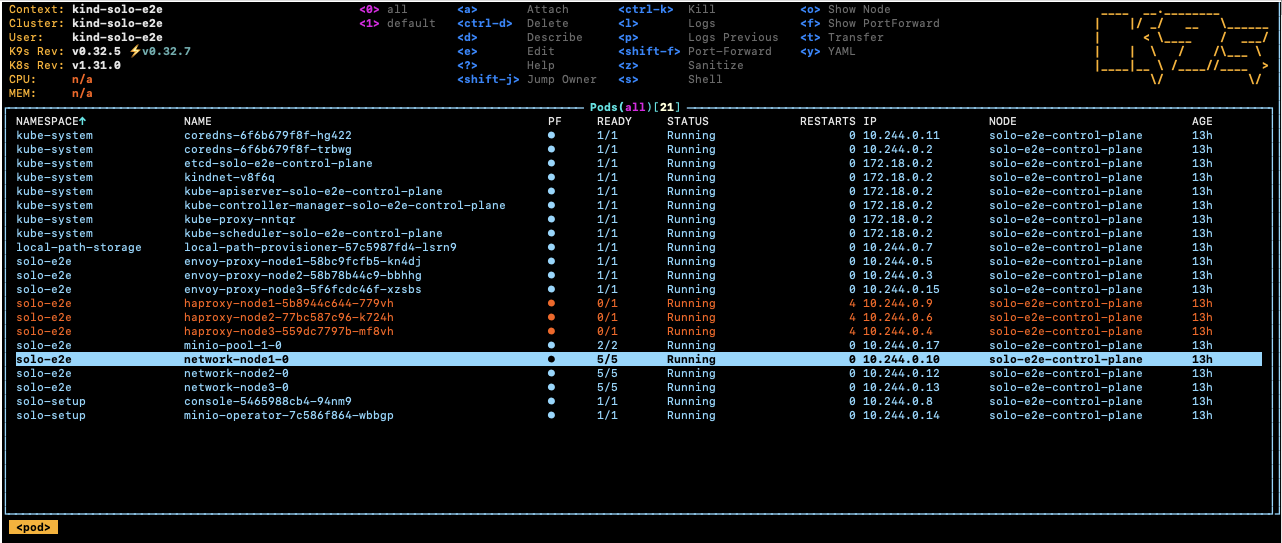

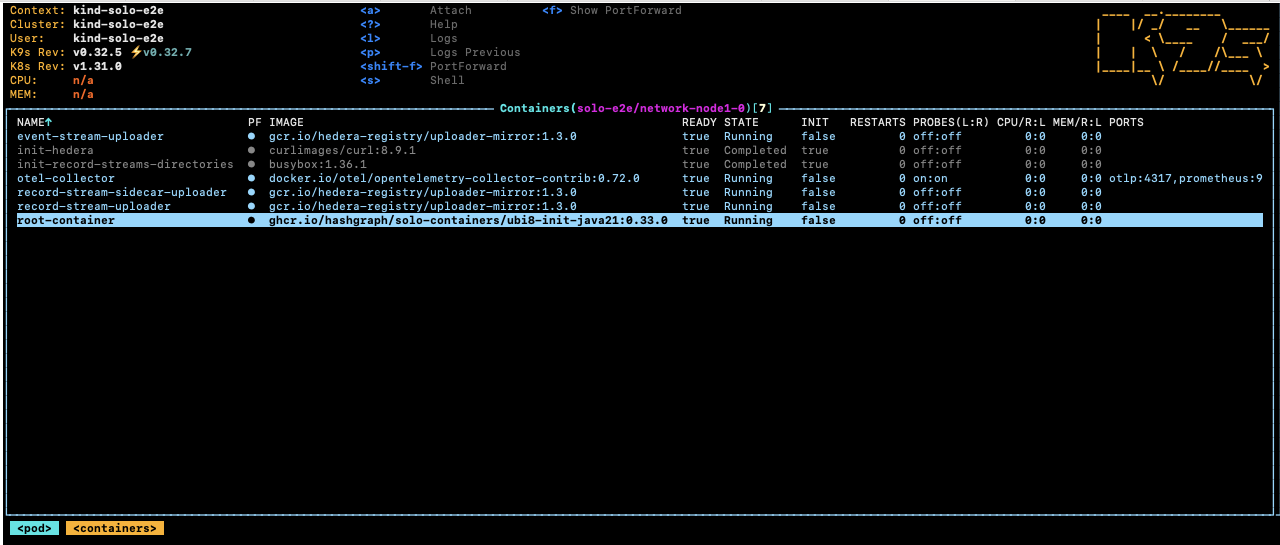

💡 Tip: The Solo testing team recommends k9s for managing Kubernetes clusters. It provides a terminal-based UI that makes it easy to view pods, logs, and cluster status. Install it with brew install k9s and run k9s to launch.

Managing Your Network

Stopping and Starting Nodes

First, find your deployment name (shown during deployment or in ~/.solo/cache/last-one-shot-deployment.txt):

cat ~/.solo/cache/last-one-shot-deployment.txt

Then use it in management commands:

# Stop all nodes

solo consensus node stop --deployment <deployment-name>

# Start nodes again

solo consensus node start --deployment <deployment-name>

# Restart nodes

solo consensus node restart --deployment <deployment-name>
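The two steps above (look up the name, then pass it to a command) can be combined in a small helper. This sketch assumes the cache file holds only the deployment name on its first line; read_deployment_name is a hypothetical function, and the command is printed rather than executed.

```shell
# read_deployment_name [FILE]: print the deployment name stored in FILE.
# Assumes the file holds just the name on its first line.
read_deployment_name() {
  file="${1:-$HOME/.solo/cache/last-one-shot-deployment.txt}"
  [ -r "$file" ] || return 1
  name="$(head -n 1 "$file" | tr -d '[:space:]')"
  [ -n "$name" ] && echo "$name"
}

if SOLO_DEPLOYMENT="$(read_deployment_name)"; then
  # Printed rather than executed, so the sketch has no side effects.
  echo "solo consensus node restart --deployment ${SOLO_DEPLOYMENT}"
else
  echo "No one-shot deployment recorded; deploy a network first." >&2
fi
```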

Viewing Logs

Capture logs and diagnostic information:

solo deployment diagnostics all --deployment <deployment-name>

Logs are saved to ~/.solo/logs/. You can also use kubectl logs directly:

kubectl logs -n <namespace> <pod-name>

Updating the Network

To update nodes to a new Hiero version:

solo consensus network upgrade --deployment <deployment-name> --upgrade-version v0.66.0

Cleanup

Destroying Your Network

🚨 Important: Always destroy your network properly before deploying a new one!

Skipping this step is one of the most common causes of deployment failures. Solo stores state about your deployment, and deploying a new network without destroying the old one first leads to conflicts and errors.

To remove your Solo network:

solo one-shot single destroy

This command:

- Removes all deployed pods and services

- Cleans up the Kubernetes namespace

- Deletes the Kind cluster

- Updates Solo’s internal state

Always run destroy before deploying a new network.
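A wrapper script can enforce this rule by refusing to deploy while a Solo-managed Kind cluster still exists. The sketch below relies on the naming convention used elsewhere in this guide (cluster names starting with "solo"); has_solo_cluster and safe_to_deploy are hypothetical helper names.

```shell
# has_solo_cluster: read cluster names on stdin, one per line; succeed when
# any name starts with "solo" (the naming convention this guide relies on).
has_solo_cluster() {
  grep -q '^solo'
}

# safe_to_deploy: succeed only when no Solo-managed Kind cluster is present.
safe_to_deploy() {
  if ! command -v kind >/dev/null 2>&1; then
    return 0  # kind is not installed, so there is nothing left over to check
  fi
  if kind get clusters 2>/dev/null | has_solo_cluster; then
    return 1
  fi
  return 0
}

if safe_to_deploy; then
  echo "No leftover Solo cluster found; safe to run: solo one-shot single deploy"
else
  echo "A Solo cluster still exists; run: solo one-shot single destroy" >&2
fi
```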

A Note on Resource Usage

Solo deploys a fully functioning mirror node that stores the transaction history generated by your local test network. During active testing, the mirror node’s resource consumption will grow as it processes more transactions. If you notice increasing resource usage, destroying and redeploying the network with the commands above gives you a clean slate.

Full Reset

If solo one-shot single destroy fails or you need to recover from a corrupted state:

# Delete only Solo-managed Kind clusters (names starting with "solo")

kind get clusters | grep '^solo' | while read -r cluster; do

  kind delete cluster -n "$cluster"

done

# Remove Solo configuration

rm -rf ~/.solo

⚠️ Warning: Routinely deleting clusters between test runs is inefficient and unnecessary. Use solo one-shot single destroy for normal teardown. The full reset above should only be used when the standard destroy command fails. Avoid using kind get clusters without the grep filter; that would delete every Kind cluster on your machine, including any unrelated to Solo.

For additional troubleshooting steps, see the Troubleshooting Guide.

Next Steps

Congratulations! You now have a working Hiero test network. Here’s what to explore next:

- Using Solo with Hiero JavaScript SDK: Create accounts, topics, and submit transactions using the SDK.

- Mirror Node Queries: Learn how to query the mirror node REST API at http://localhost:5551.

- Advanced Network Deployments: Deploy networks with custom configurations using Falcon, manual step-by-step deployment, or add/delete nodes dynamically.

- Examples: Explore example configurations for various deployment scenarios.

- FAQ: Common questions and answers about Solo.

If you run into issues, check the Troubleshooting Guide for solutions to common problems.

3 - Advanced Network Deployments

This guide covers advanced deployment scenarios for users who need more control over their Solo network configuration.

Prerequisites

Before using advanced deployment options, ensure you have completed the Solo User Guide and have:

- Solo installed (solo --version)

- Docker running with adequate resources

- kubectl configured

- A Kind cluster created

Set up your environment variables if not already done:

export SOLO_CLUSTER_NAME=solo

export SOLO_NAMESPACE=solo

export SOLO_CLUSTER_SETUP_NAMESPACE=solo-cluster

export SOLO_DEPLOYMENT=solo-deployment
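Because later commands expand these variables directly, an unset one produces a confusing error far from its cause. The following sketch checks them up front; require_exported is a hypothetical helper, and it relies on printenv, so it only sees variables that were exported as shown above.

```shell
# require_exported NAME...: report every named variable that is not exported
# with a non-empty value; succeed only when none are missing.
require_exported() {
  missing=0
  for name in "$@"; do
    if [ -z "$(printenv "$name")" ]; then
      echo "missing: $name" >&2
      missing=1
    fi
  done
  return "$missing"
}

if require_exported SOLO_CLUSTER_NAME SOLO_NAMESPACE \
                    SOLO_CLUSTER_SETUP_NAMESPACE SOLO_DEPLOYMENT; then
  echo "Environment is ready for the manual deployment steps"
else
  echo "Export the variables listed above before continuing" >&2
fi
```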

Falcon Deployment

Falcon deployment provides fine-grained control over all network components through a YAML configuration file. This is ideal for CI/CD pipelines, automated testing, and complex deployment scenarios.

Basic Falcon Deployment

solo one-shot falcon deploy --values-file falcon-values.yaml

Example Configuration File

Create a file named falcon-values.yaml:

network:
  --deployment: "my-network"
  --release-tag: "v0.65.0"
  --node-aliases: "node1"

setup:
  --release-tag: "v0.65.0"
  --node-aliases: "node1"

consensusNode:
  --deployment: "my-network"
  --node-aliases: "node1"
  --force-port-forward: true

mirrorNode:
  --enable-ingress: true
  --pinger: true

explorerNode:
  --enable-ingress: true

relayNode:
  --node-aliases: "node1"

Multi-Node Falcon Configuration

For multiple consensus nodes:

network:
  --deployment: "my-multi-network"
  --release-tag: "v0.65.0"
  --node-aliases: "node1,node2,node3"

setup:
  --release-tag: "v0.65.0"
  --node-aliases: "node1,node2,node3"

consensusNode:
  --deployment: "my-multi-network"
  --node-aliases: "node1,node2,node3"
  --force-port-forward: true

mirrorNode:
  --enable-ingress: true
  --pinger: true

explorerNode:
  --enable-ingress: true

relayNode:
  --node-aliases: "node1"
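The node alias list has to be repeated consistently in several sections, which is easy to get wrong by hand for larger networks. As a sketch, the list and one section of the values file could be generated; node_aliases and falcon_network_section are hypothetical helpers, and the two-space nesting of flag keys under each section is an assumption about the file layout.

```shell
# node_aliases COUNT: print "node1,node2,...,nodeCOUNT".
node_aliases() {
  i=1
  aliases=""
  while [ "$i" -le "$1" ]; do
    aliases="${aliases:+$aliases,}node$i"
    i=$((i + 1))
  done
  echo "$aliases"
}

# falcon_network_section DEPLOYMENT RELEASE_TAG COUNT: print the "network"
# section of a Falcon values file using the keys shown in the examples above.
falcon_network_section() {
  printf 'network:\n'
  printf '  --deployment: "%s"\n' "$1"
  printf '  --release-tag: "%s"\n' "$2"
  printf '  --node-aliases: "%s"\n' "$(node_aliases "$3")"
}

falcon_network_section my-multi-network v0.65.0 3
```

The same node_aliases output can be reused for the setup and consensusNode sections so that all three stay in sync.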

Falcon with Block Node

Note: Block Node is experimental and requires at least 16 GB of memory allocated to Docker.

network:
  --deployment: "block-node-network"
  --release-tag: "v0.62.6"
  --node-aliases: "node1"

setup:
  --release-tag: "v0.62.6"
  --node-aliases: "node1"

consensusNode:
  --deployment: "block-node-network"
  --node-aliases: "node1"
  --force-port-forward: true

blockNode:
  --deployment: "block-node-network"
  --release-tag: "v0.62.6"

mirrorNode:
  --enable-ingress: true
  --pinger: true

explorerNode:
  --enable-ingress: true

relayNode:
  --node-aliases: "node1"

Tearing Down Falcon Deployment

solo one-shot falcon destroy

See the Falcon example for a complete configuration template.

Step-by-Step Manual Deployment

For maximum control, you can deploy each component individually. This is useful for debugging, custom configurations, or when you need to modify specific deployment steps.

1. Connect Cluster and Create Deployment

# Connect to the Kind cluster

solo cluster-ref config connect --cluster-ref kind-${SOLO_CLUSTER_NAME} --context kind-${SOLO_CLUSTER_NAME}

# Create a new deployment

solo deployment config create -n "${SOLO_NAMESPACE}" --deployment "${SOLO_DEPLOYMENT}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : cluster-ref config connect --cluster-ref kind-solo --context kind-solo

**********************************************************************************

Initialize

✔ Initialize

Validating cluster ref:

✔ Validating cluster ref: kind-solo

Test connection to cluster:

✔ Test connection to cluster: kind-solo

Associate a context with a cluster reference:

✔ Associate a context with a cluster reference: kind-solo

2. Add Cluster to Deployment

Specify the number of consensus nodes:

# For a single node

solo deployment cluster attach --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_NAME} --num-consensus-nodes 1

# For multiple nodes (e.g., 3 nodes)

# solo deployment cluster attach --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_NAME} --num-consensus-nodes 3


3. Generate Keys

solo keys consensus generate --gossip-keys --tls-keys --deployment "${SOLO_DEPLOYMENT}"

PEM key files are generated in ~/.solo/cache/keys/.

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : keys consensus generate --gossip-keys --tls-keys --deployment solo-deployment

**********************************************************************************

Initialize

✔ Initialize

Generate gossip keys

Backup old files

✔ Backup old files

Gossip key for node: node1

✔ Gossip key for node: node1 [0.2s]

✔ Generate gossip keys [0.2s]

Generate gRPC TLS Keys

Backup old files

TLS key for node: node1

✔ Backup old files

✔ TLS key for node: node1 [0.5s]

✔ Generate gRPC TLS Keys [0.5s]

Finalize

✔ Finalize

4. Set Up Cluster with Shared Components

solo cluster-ref config setup -s "${SOLO_CLUSTER_SETUP_NAMESPACE}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : cluster-ref config setup --cluster-setup-namespace solo-cluster

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

✔ Initialize

Install cluster charts

Skipping Grafana Agent chart installation

Install pod-monitor-role ClusterRole

⏭️ ClusterRole pod-monitor-role already exists in context kind-solo, skipping

✔ Install pod-monitor-role ClusterRole

Install MinIO Operator chart

✅ MinIO Operator chart installed successfully on context kind-solo

✔ Install MinIO Operator chart [0.6s]

✔ Install cluster charts [0.6s]

5. Deploy the Network

solo consensus network deploy --deployment "${SOLO_DEPLOYMENT}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : consensus network deploy --deployment solo-deployment --release-tag v0.66.0

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [0.2s]

Copy gRPC TLS Certificates

Copy gRPC TLS Certificates [SKIPPED: Copy gRPC TLS Certificates]

Prepare staging directory

Copy Gossip keys to staging

✔ Copy Gossip keys to staging

Copy gRPC TLS keys to staging

✔ Copy gRPC TLS keys to staging

✔ Prepare staging directory

Copy node keys to secrets

Copy TLS keys

Node: node1, cluster: kind-solo

Copy Gossip keys

✔ Copy Gossip keys

✔ Node: node1, cluster: kind-solo

✔ Copy TLS keys

✔ Copy node keys to secrets

Install monitoring CRDs

Pod Logs CRDs

✔ Pod Logs CRDs

Prometheus Operator CRDs

- Installed prometheus-operator-crds chart, version: 24.0.2

✔ Prometheus Operator CRDs [3s]

✔ Install monitoring CRDs [3s]

Install chart 'solo-deployment'

- Installed solo-deployment chart, version: 0.60.2

✔ Install chart 'solo-deployment' [2s]

Check for load balancer

Check for load balancer [SKIPPED: Check for load balancer]

Redeploy chart with external IP address config

Redeploy chart with external IP address config [SKIPPED: Redeploy chart with external IP address config]

Check node pods are running

Check Node: node1, Cluster: kind-solo

✔ Check Node: node1, Cluster: kind-solo [26s]

✔ Check node pods are running [26s]

Check proxy pods are running

Check HAProxy for: node1, cluster: kind-solo

Check Envoy Proxy for: node1, cluster: kind-solo

✔ Check HAProxy for: node1, cluster: kind-solo

✔ Check Envoy Proxy for: node1, cluster: kind-solo

✔ Check proxy pods are running

Check auxiliary pods are ready

Check MinIO

✔ Check MinIO

✔ Check auxiliary pods are ready

Add node and proxies to remote config

✔ Add node and proxies to remote config

Copy block-nodes.json

✔ Copy block-nodes.json [1s]

6. Set Up Consensus Nodes

export CONSENSUS_NODE_VERSION=v0.66.0

solo consensus node setup --deployment "${SOLO_DEPLOYMENT}" --release-tag "${CONSENSUS_NODE_VERSION}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : consensus node setup --deployment solo-deployment --release-tag v0.66.0

**********************************************************************************

Load configuration

✔ Load configuration [0.2s]

Initialize

✔ Initialize [0.2s]

Validate nodes states

Validating state for node node1

✔ Validating state for node node1 - valid state: requested

✔ Validate nodes states

Identify network pods

Check network pod: node1

✔ Check network pod: node1

✔ Identify network pods

Fetch platform software into network nodes

Update node: node1 [ platformVersion = v0.66.0, context = kind-solo ]

✔ Update node: node1 [ platformVersion = v0.66.0, context = kind-solo ] [4s]

✔ Fetch platform software into network nodes [4s]

Setup network nodes

Node: node1

Copy configuration files

✔ Copy configuration files [0.5s]

Set file permissions

✔ Set file permissions [0.6s]

✔ Node: node1 [1s]

✔ Setup network nodes [1s]

setup network node folders

✔ setup network node folders [0.1s]

Change node state to configured in remote config

✔ Change node state to configured in remote config

7. Start Consensus Nodes

solo consensus node start --deployment "${SOLO_DEPLOYMENT}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : consensus node start --deployment solo-deployment

**********************************************************************************

Check dependencies

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Load configuration

✔ Load configuration [0.2s]

Initialize

✔ Initialize [0.2s]

Validate nodes states

Validating state for node node1

✔ Validating state for node node1 - valid state: configured

✔ Validate nodes states

Identify existing network nodes

Check network pod: node1

✔ Check network pod: node1

✔ Identify existing network nodes

Upload state files network nodes

Upload state files network nodes [SKIPPED: Upload state files network nodes]

Starting nodes

Start node: node1

✔ Start node: node1 [0.4s]

✔ Starting nodes [0.4s]

Enable port forwarding for debug port and/or GRPC port

Using requested port 50211

✔ Enable port forwarding for debug port and/or GRPC port

Check all nodes are ACTIVE

Check network pod: node1

✔ Check network pod: node1 - status ACTIVE, attempt: 17/300 [22s]

✔ Check all nodes are ACTIVE [22s]

Check node proxies are ACTIVE

Check proxy for node: node1

✔ Check proxy for node: node1 [8s]

✔ Check node proxies are ACTIVE [8s]

set gRPC Web endpoint

Using requested port 30212

✔ set gRPC Web endpoint [3s]

Change node state to started in remote config

✔ Change node state to started in remote config

Add node stakes

Adding stake for node: node1

✔ Adding stake for node: node1 [4s]

✔ Add node stakes [4s]

Stopping port-forwarder for port [30212]

8. Deploy Mirror Node

solo mirror node add --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_NAME} --enable-ingress --pinger

The --pinger flag ensures record files are imported regularly.

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : mirror node add --deployment solo-deployment --cluster-ref kind-solo --enable-ingress --quiet-mode

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Using requested port 30212

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [1s]

Enable mirror-node

Prepare address book

✔ Prepare address book

Install mirror ingress controller

- Installed haproxy-ingress-1 chart, version: 0.14.5

✔ Install mirror ingress controller [0.6s]

Deploy mirror-node

- Installed mirror chart, version: v0.146.0

✔ Deploy mirror-node [2s]

✔ Enable mirror-node [3s]

Check pods are ready

Check Postgres DB

Check REST API

Check GRPC

Check Monitor

Check Web3

Check Importer

✔ Check Postgres DB [44s]

✔ Check Web3 [56s]

✔ Check GRPC [1m8s]

✔ Check Monitor [1m28s]

✔ Check REST API [1m36s]

✔ Check Importer [2m2s]

✔ Check pods are ready [2m2s]

Seed DB data

Insert data in public.file_data

✔ Insert data in public.file_data [0.4s]

✔ Seed DB data [0.4s]

Add mirror node to remote config

✔ Add mirror node to remote config

Enable port forwarding for mirror ingress controller

Using requested port 8081

✔ Enable port forwarding for mirror ingress controller

Stopping port-forwarder for port [30212]

9. Deploy Explorer

solo explorer node add --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_NAME}

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : explorer node add --deployment solo-deployment --cluster-ref kind-solo --quiet-mode

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [0.4s]

Load remote config

✔ Load remote config [0.1s]

Install cert manager

Install cert manager [SKIPPED: Install cert manager]

Install explorer

- Installed hiero-explorer-1 chart, version: 26.0.0

✔ Install explorer [0.7s]

Install explorer ingress controller

Install explorer ingress controller [SKIPPED: Install explorer ingress controller]

Check explorer pod is ready

✔ Check explorer pod is ready [14s]

Check haproxy ingress controller pod is ready

Check haproxy ingress controller pod is ready [SKIPPED: Check haproxy ingress controller pod is ready]

Add explorer to remote config

✔ Add explorer to remote config

Enable port forwarding for explorer

No port forward config found for Explorer

Using requested port 8080

✔ Enable port forwarding for explorer [0.1s]

10. Deploy JSON RPC Relay

solo relay node add -i node1 --deployment "${SOLO_DEPLOYMENT}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : relay node add --node-aliases node1 --deployment solo-deployment --cluster-ref kind-solo

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [0.5s]

Check chart is installed

✔ Check chart is installed [0.1s]

Prepare chart values

Using requested port 30212

✔ Prepare chart values [1s]

Deploy JSON RPC Relay

- Installed relay-1 chart, version: 0.73.0

✔ Deploy JSON RPC Relay [40s]

Check relay is running

✔ Check relay is running

Check relay is ready

✔ Check relay is ready [0.1s]

Add relay component in remote config

✔ Add relay component in remote config

Enable port forwarding for relay node

Using requested port 7546

✔ Enable port forwarding for relay node [0.1s]

Stopping port-forwarder for port [30212]
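Once port forwarding for the relay is enabled, one way to confirm that it answers requests is to send it a standard Ethereum JSON-RPC call. The sketch below assumes the relay is reachable at http://localhost:7546 (the forwarded port shown in the output above) and that curl is installed. The names jsonrpc_payload, relay_chain_id, and RELAY_URL are illustrative and not part of Solo.

```shell
# Hypothetical helper: build a JSON-RPC 2.0 request body for a method without parameters.
jsonrpc_payload() {
  printf '{"jsonrpc":"2.0","id":1,"method":"%s","params":[]}' "$1"
}

# Assumption: the relay port-forward from the output above listens on localhost:7546.
RELAY_URL="${RELAY_URL:-http://localhost:7546}"

# Hypothetical helper: ask the relay for its chain ID.
# eth_chainId is a standard Ethereum JSON-RPC method.
relay_chain_id() {
  curl --silent --show-error \
    --header 'Content-Type: application/json' \
    --data "$(jsonrpc_payload eth_chainId)" \
    "${RELAY_URL}"
}
```

Calling relay_chain_id after the deployment finishes should print a JSON response if the relay is up; a connection error usually means the port forward is not active.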

Deploying Block Node (Experimental)

Warning: Block Node requires at least 16 GB of memory and Consensus Node version v0.62.3 or higher.

Block Node must be deployed before the network:

# Deploy Block Node first

solo block node add --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-"${SOLO_CLUSTER_NAME}" --release-tag v0.62.6

# Then deploy the network with the matching version

solo consensus network deploy --deployment "${SOLO_DEPLOYMENT}"

solo consensus node setup --deployment "${SOLO_DEPLOYMENT}" --release-tag v0.62.6

solo consensus node start --deployment "${SOLO_DEPLOYMENT}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : block node add --deployment solo-deployment --cluster-ref kind-solo --release-tag v0.66.0

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

Prepare release name and block node name

✔ Prepare release name and block node name

Prepare chart values

✔ Prepare chart values

Deploy block node

- Installed block-node-1 chart, version: 0.26.2

✔ Deploy block node [1s]

Check block node pod is running

✔ Check block node pod is running [12s]

Check software

✔ Check software

Check block node pod is ready

✔ Check block node pod is ready [41s]

Check block node readiness

✔ Check block node readiness - [1/100] success [0.2s]

Add block node component in remote config

✔ Add block node component in remote config

Update consensus nodes

Update consensus nodes in remote config

✔ Update consensus nodes in remote config

✔ Update consensus nodes
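The Block Node warning above sets a minimum Consensus Node version, so it can help to check the release tag before deploying. The following is a minimal sketch, assuming `sort -V` (version sort, as provided by GNU coreutils) is available. The names version_ge, MIN_CONSENSUS_VERSION, and RELEASE_TAG are hypothetical, and pre-release suffixes such as `-rc.1` are not ordered according to semantic versioning rules by this approach.

```shell
# Hypothetical helper: succeed when version $1 is greater than or equal to version $2.
# A leading "v" is stripped from both arguments before comparing.
version_ge() {
  have="${1#v}"
  want="${2#v}"
  # The lower of the two versions sorts first; if that is the wanted minimum, we are fine.
  lowest="$(printf '%s\n%s\n' "$have" "$want" | sort -V | head -n 1)"
  [ "$lowest" = "$want" ]
}

MIN_CONSENSUS_VERSION="v0.62.3"        # minimum stated in the warning above
RELEASE_TAG="${RELEASE_TAG:-v0.62.6}"  # tag used in the commands above

if version_ge "$RELEASE_TAG" "$MIN_CONSENSUS_VERSION"; then
  echo "OK: ${RELEASE_TAG} meets the Block Node minimum (${MIN_CONSENSUS_VERSION})"
else
  echo "Too old: ${RELEASE_TAG} is below ${MIN_CONSENSUS_VERSION}" >&2
fi
```

Keeping the tag in a single variable also makes it easier to pass the same value to every command that takes `--release-tag`.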

To destroy Block Node (must be done before network destruction):

solo block node destroy --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-"${SOLO_CLUSTER_NAME}"

Connecting to a Remote Cluster

Solo can deploy to any Kubernetes cluster, not just local Kind clusters.

Setting Up Remote Cluster Connection

# View available contexts

kubectl config get-contexts

# Switch to your remote cluster context

kubectl config use-context <context-name>

# Connect Solo to the remote cluster

solo cluster-ref config connect --cluster-ref <cluster-ref-name> --context <context-name>

Remote Cluster Requirements

- Kubernetes 1.24 or higher

- Sufficient resources for network components

- Network access to pull container images

- Storage class available for persistent volumes
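Some of the requirements above can be checked before connecting Solo to the cluster. The sketch below covers only the version comparison; the helper name k8s_at_least_1_24 is hypothetical, and the commented wiring assumes that kubectl already points at the remote context and that jq is installed.

```shell
# Hypothetical helper: succeed when a Kubernetes major/minor pair is at least 1.24.
# Some providers report the minor version with a suffix such as "27+", so keep digits only.
k8s_at_least_1_24() {
  major="$(printf '%s' "$1" | tr -cd '0-9')"
  minor="$(printf '%s' "$2" | tr -cd '0-9')"
  major="${major:-0}"
  minor="${minor:-0}"
  [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 24 ]; }
}

# Example wiring (assumptions: kubectl targets the remote cluster, jq is installed):
#   major="$(kubectl version -o json | jq -r '.serverVersion.major')"
#   minor="$(kubectl version -o json | jq -r '.serverVersion.minor')"
#   k8s_at_least_1_24 "$major" "$minor" || echo "cluster is older than 1.24" >&2
#   kubectl get storageclass   # should list at least one storage class
```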

Adding Nodes to an Existing Network

You can dynamically add new consensus nodes to a running network.

Quick Add (When Available)

# TODO: solo consensus node add (coming soon)

Step-by-Step Node Addition

For precise control over the node addition process:

# Prepare the new node

solo consensus dev-node-add prepare \

--gossip-keys true \

--tls-keys true \

--deployment "${SOLO_DEPLOYMENT}" \

--pvcs true \

--admin-key <admin-key> \

--node-alias node2 \

--output-dir context

# Submit the transaction to add the node

solo consensus dev-node-add submit-transactions \

--deployment "${SOLO_DEPLOYMENT}" \

--input-dir context

# Execute the node addition

solo consensus dev-node-add execute \

--deployment "${SOLO_DEPLOYMENT}" \

--input-dir context

See the node-create-transaction example for a complete walkthrough.
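The three phases above hand state to each other through the directory given as `--output-dir` and `--input-dir`. The sketch below guards that hand-off. It assumes the prepare phase writes its files into that directory (inferred from the flag names), and the helper names fresh_context_dir and context_dir_ready are hypothetical.

```shell
# Hypothetical helper: create an empty hand-off directory for the prepare phase.
# Refuses to reuse a directory that already contains files from an earlier run.
fresh_context_dir() {
  dir="$1"
  if [ -d "$dir" ] && [ -n "$(ls -A -- "$dir")" ]; then
    echo "refusing to reuse non-empty directory: $dir" >&2
    return 1
  fi
  mkdir -p -- "$dir"
}

# Hypothetical helper: succeed only if the directory exists and contains at least one entry.
context_dir_ready() {
  dir="$1"
  [ -d "$dir" ] && [ -n "$(ls -A -- "$dir")" ]
}

# Example wiring:
#   fresh_context_dir context   # before running the prepare phase with --output-dir context
#   context_dir_ready context   # before running the later phases with --input-dir context
```

The same checks apply to the node deletion and node update flows, which use the same directory hand-off.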

Deleting Nodes from a Network

You can dynamically remove consensus nodes from a running network.

Quick Delete (When Available)

# TODO: solo consensus node destroy (coming soon)

Step-by-Step Node Deletion

For precise control over the node deletion process:

# Prepare the node for deletion

solo consensus dev-node-delete prepare \

--deployment "${SOLO_DEPLOYMENT}" \

--node-alias node2 \

--output-dir context

# Submit the transaction to delete the node

solo consensus dev-node-delete submit-transactions \

--deployment "${SOLO_DEPLOYMENT}" \

--input-dir context

# Execute the node deletion

solo consensus dev-node-delete execute \

--deployment "${SOLO_DEPLOYMENT}" \

--input-dir context

See the node-delete-transaction example for a complete walkthrough.

Step-by-Step Node Update

For testing the update process or granular control:

# Prepare the update

solo consensus dev-node-update prepare \

--deployment "${SOLO_DEPLOYMENT}" \

--node-alias node1 \

--release-tag v0.66.0 \

--output-dir context

# Submit the update transaction

solo consensus dev-node-update submit-transactions \

--deployment "${SOLO_DEPLOYMENT}" \

--input-dir context

# Execute the update

solo consensus dev-node-update execute \

--deployment "${SOLO_DEPLOYMENT}" \

--input-dir context

See the node-update-transaction example for a complete walkthrough.

Complete Cleanup for Manual Deployments

When using manual deployment, clean up in reverse order:

# 1. Destroy relay node

solo relay node destroy -i node1 --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-"${SOLO_CLUSTER_NAME}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : relay node destroy --node-aliases node1 --deployment solo-deployment --cluster-ref kind-solo

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [0.7s]

Destroy JSON RPC Relay

*** Destroyed Relays ***

-------------------------------------------------------------------------------

- block-node-1 [block-node-server-0.26.2]

- haproxy-ingress-1 [haproxy-ingress-0.14.5]

- hiero-explorer-1 [hiero-explorer-chart-26.0.0]

- mirror-1 [hedera-mirror-0.146.0]

- prometheus-operator-crds [prometheus-operator-crds-24.0.2]

- solo-deployment [solo-deployment-0.60.2]

✔ Destroy JSON RPC Relay [0.5s]

Remove relay component from remote config

✔ Remove relay component from remote config

# 2. Destroy mirror node

solo mirror node destroy --deployment "${SOLO_DEPLOYMENT}" --force

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : mirror node destroy --deployment solo-deployment --quiet-mode

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Using requested port 30212

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [1s]

Destroy mirror-node

✔ Destroy mirror-node [0.5s]

Delete PVCs

✔ Delete PVCs

Uninstall mirror ingress controller

✔ Uninstall mirror ingress controller [0.3s]

Remove mirror node from remote config

✔ Remove mirror node from remote config

Stopping port-forwarder for port [30212]

# 3. Destroy explorer node

solo explorer node destroy --deployment "${SOLO_DEPLOYMENT}" --force

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : explorer node destroy --deployment solo-deployment --quiet-mode

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10 [0.1s]

✔ Initialize [0.9s]

Load remote config

✔ Load remote config [0.3s]

Destroy explorer

✔ Destroy explorer [1s]

Uninstall explorer ingress controller

✔ Uninstall explorer ingress controller [0.2s]

Remove explorer from remote config

✔ Remove explorer from remote config

# 4. Destroy block node (if deployed) - BEFORE network destruction

solo block node destroy --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-"${SOLO_CLUSTER_NAME}"

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : block node destroy --deployment solo-deployment --cluster-ref kind-solo

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64] [0.1s]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64] [0.1s]

✔ Check dependencies [0.1s]

Setup chart manager

✔ Setup chart manager [0.1s]

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize [0.8s]

Destroy block node

✔ Destroy block node [1s]

Disable block node component in remote config

✔ Disable block node component in remote config

Rebuild 'block.nodes.json' for consensus nodes

✔ Rebuild 'block.nodes.json' for consensus nodes [1s]

# 5. Destroy the network

solo consensus network destroy --deployment "${SOLO_DEPLOYMENT}" --force

Example output:

******************************* Solo *********************************************

Version : 0.56.0

Kubernetes Context : kind-solo

Kubernetes Cluster : kind-solo

Current Command : consensus network destroy --deployment solo-deployment --quiet-mode

**********************************************************************************

Check dependencies

Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: helm [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependency: kubectl [OS: linux, Release: 6.8.0-94-generic, Arch: x64]

✔ Check dependencies

Setup chart manager

✔ Setup chart manager

Initialize

Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

Running sub-tasks to destroy network

✔ Deleting the RemoteConfig configmap in namespace solo [0.8s]
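The five cleanup steps above can be collected into one function so that the order is always respected. This is a sketch rather than an official script: SOLO_BIN and WITH_BLOCK_NODE are hypothetical variables introduced here, while the commands themselves are the ones listed in this section. Setting SOLO_BIN to `echo` prints the commands instead of running them.

```shell
# Sketch: run the destroy commands from this section in order, stopping at the first failure.
# Expects SOLO_DEPLOYMENT and SOLO_CLUSTER_NAME to be set, as elsewhere in this guide.
solo_cleanup() {
  solo_bin="${SOLO_BIN:-solo}"               # hypothetical override, e.g. SOLO_BIN=echo for a dry run
  cluster_ref="kind-${SOLO_CLUSTER_NAME}"

  "$solo_bin" relay node destroy -i node1 --deployment "${SOLO_DEPLOYMENT}" --cluster-ref "$cluster_ref" || return 1
  "$solo_bin" mirror node destroy --deployment "${SOLO_DEPLOYMENT}" --force || return 1
  "$solo_bin" explorer node destroy --deployment "${SOLO_DEPLOYMENT}" --force || return 1

  # The block node is optional; destroy it before the network when it was deployed.
  if [ "${WITH_BLOCK_NODE:-false}" = "true" ]; then
    "$solo_bin" block node destroy --deployment "${SOLO_DEPLOYMENT}" --cluster-ref "$cluster_ref" || return 1
  fi

  "$solo_bin" consensus network destroy --deployment "${SOLO_DEPLOYMENT}" --force
}
```

For example, `SOLO_BIN=echo WITH_BLOCK_NODE=true solo_cleanup` lists the five commands in the order they would run.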

Additional Examples

Explore more deployment scenarios in the Examples section.

4 - Solo CLI User Manual

Solo Command Line User Manual

Solo provides a set of commands, some of which have subcommands. You can get help information in the following ways:

solo --help returns the help information for the solo command and shows which commands are available.

Version Information

Check the Solo version using:

solo --version

For machine-readable output formats (Kubernetes ecosystem standard), use the --output or -o flag:

solo --version -o json # JSON format: {"version": "0.46.1"}

solo --version -o yaml # YAML format: version: 0.46.1

solo --version -o wide # Plain text: 0.46.1

The --output flag can also be used with other Solo commands to suppress banners and produce machine-readable output, making it ideal for scripts and CI/CD pipelines.
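In a script, the JSON form is the easiest to consume. The sketch below extracts the version string from the JSON shape shown above using sed, so no JSON tooling is required. The helper name extract_solo_version is hypothetical, and a real JSON parser such as jq would be more robust if it is available.

```shell
# Hypothetical helper: read {"version": "x.y.z"} on stdin and print x.y.z.
extract_solo_version() {
  sed -n 's/.*"version"[[:space:]]*:[[:space:]]*"\([^"]*\)".*/\1/p'
}

# Example wiring in a CI step:
#   version="$(solo --version -o json | extract_solo_version)"
#   echo "Running Solo ${version}"
```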

solo command --help returns the help information for the specific command and shows which options are available.

solo ledger account --help

Manage Hedera accounts in solo network

Commands:

system init Initialize system accounts with new keys

account create Creates a new account with a new key and stores the key in the Kubernetes secrets, if you supply no key one will be generated for you, otherwise you may supply either a ECDSA or ED25519 private key

account update Updates an existing account with the provided info, if you want to update the private key, you can supply either ECDSA or ED25519 but not both

account get Gets the account info including the current amount of HBAR

Options:

--dev Enable developer mode [boolean]

--force-port-forward Force port forward to access the network services [boolean]

-h, --help Show help [boolean]

-v, --version Show version number [boolean]

solo command subcommand --help returns the help information for the specific subcommand and shows which options are available.

solo ledger account create --help

Creates a new account with a new key and stores the key in the Kubernetes secrets, if you supply no key one will be generated for you, otherwise you may supply either a ECDSA or ED25519 private key

Options:

--dev Enable developer mode [boolean]

--force-port-forward Force port forward to access the network services [boolean]

--hbar-amount Amount of HBAR to add [number]

--create-amount Amount of new account to create [number]

--ecdsa-private-key ECDSA private key for the Hedera account [string]

-d, --deployment The name the user will reference locally to link to a deployment [string]

--ed25519-private-key ED25519 private key for the Hedera account [string]

--generate-ecdsa-key Generate ECDSA private key for the Hedera account [boolean]

--set-alias Sets the alias for the Hedera account when it is created, requires --ecdsa-private-key [boolean]

-c, --cluster-ref The cluster reference that will be used for referencing the Kubernetes cluster and stored in the local and remote configuration for the deployment. For commands that take multiple clusters they can be separated by commas. [string]

-h, --help Show help [boolean]

-v, --version Show version number [boolean]

For more information see: Solo CLI Commands

5 - Updated CLI Command Mappings

Updated CLI Command Mappings

The following tables provide a complete mapping of previous (< v0.44.0) CLI commands to their updated three-level structure. Entries marked as No changes retain their original form.

Init

| Old Command | New Command |

|---|---|

| init | No changes |

Block node

| Old Command | New Command |

|---|---|

| block node add | No changes |

| block node destroy | No changes |

| block node upgrade | No changes |

Account

| Old Command | New Command |

|---|---|

| account init | ledger system init |

| account update | ledger account update |

| account create | ledger account create |

| account get | ledger account info |

One Shot

| Old Command | New Command |

|---|---|

| quick-start single deploy | one-shot single deploy |

| quick-start single destroy | one-shot single destroy |

Cluster Reference

| Old Command | New Command |

|---|---|

| cluster-ref connect | cluster-ref config connect |

| cluster-ref disconnect | cluster-ref config disconnect |

| cluster-ref list | cluster-ref config list |

| cluster-ref info | cluster-ref config info |

| cluster-ref setup | cluster-ref config setup |

| cluster-ref reset | cluster-ref config reset |

Deployment

| Old Command | New Command |

|---|---|

| deployment add-cluster | deployment cluster attach |

| deployment list | deployment config list |

| deployment create | deployment config create |

| deployment delete | deployment config delete |

Explorer

| Old Command | New Command |

|---|---|

| explorer deploy | explorer node add |

| explorer destroy | explorer node destroy |

Mirror Node

| Old Command | New Command |

|---|---|

| mirror-node deploy | mirror node add |

| mirror-node destroy | mirror node destroy |

Relay

| Old Command | New Command |

|---|---|

| relay deploy | relay node add |

| relay destroy | relay node destroy |

Network

| Old Command | New Command |

|---|---|

| network deploy | consensus network deploy |

| network destroy | consensus network destroy |

Node

| Old Command | New Command |

|---|---|

| node keys | keys consensus generate |

| node freeze | consensus network freeze |

| node upgrade | consensus network upgrade |

| node setup | consensus node setup |

| node start | consensus node start |

| node stop | consensus node stop |

| node upgrade | consensus node upgrade |

| node restart | consensus node restart |

| node refresh | consensus node refresh |

| node add | consensus node add |

| node update | consensus node update |

| node delete | consensus node destroy |

| node add-prepare | consensus dev-node-add prepare |

| node add-submit-transaction | consensus dev-node-add submit-transactions |

| node add-execute | consensus dev-node-add execute |

| node update-prepare | consensus dev-node-update prepare |

| node update-submit-transaction | consensus dev-node-update submit-transactions |

| node update-execute | consensus dev-node-update execute |

| node upgrade-prepare | consensus dev-node-upgrade prepare |

| node upgrade-submit-transaction | consensus dev-node-upgrade submit-transactions |

| node upgrade-execute | consensus dev-node-upgrade execute |

| node delete-prepare | consensus dev-node-delete prepare |

| node delete-submit-transaction | consensus dev-node-delete submit-transactions |

| node delete-execute | consensus dev-node-delete execute |

| node prepare-upgrade | consensus dev-freeze prepare-upgrade |

| node freeze-upgrade | consensus dev-freeze freeze-upgrade |

| node download-generated-files | consensus diagnostic configs |

| node logs | deployment diagnostics logs |

| node states | consensus state download |
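When migrating older scripts, the tables above can be encoded as a small lookup. The following sketch covers only a handful of rows and is meant as a starting point. The function name map_solo_command is hypothetical; commands that are not listed are returned unchanged.

```shell
# Hypothetical helper: translate a pre-v0.44.0 command prefix to its current form.
# Only some rows from the tables above are included; extend the case list as needed.
map_solo_command() {
  case "$1" in
    "account init")       echo "ledger system init" ;;
    "mirror-node deploy") echo "mirror node add" ;;
    "relay deploy")       echo "relay node add" ;;
    "explorer deploy")    echo "explorer node add" ;;
    "network deploy")     echo "consensus network deploy" ;;
    "node setup")         echo "consensus node setup" ;;
    "node start")         echo "consensus node start" ;;
    "node keys")          echo "keys consensus generate" ;;
    *)                    echo "$1" ;;  # unchanged or not in this partial list
  esac
}
```

For example, `map_solo_command "network deploy"` prints the three-level form, which can then be combined with the original flags.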

6 - Solo CLI Commands

Solo Command Reference


Root Help Output

Usage:

solo <command> [options]

Commands:

init Initialize local environment

config Backup and restore component configurations for Solo deployments. These commands display what would be backed up or restored without performing actual operations.

block Block Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

cluster-ref Manages the relationship between Kubernetes context names and Solo cluster references which are an alias for a kubernetes context.

consensus Consensus Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

deployment Create, modify, and delete deployment configurations. Deployments are required for most of the other commands.

explorer Explorer Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

keys Consensus key generation operations

ledger System, Account, and Crypto ledger-based management operations. These commands require an operational set of consensus nodes and may require an operational mirror node.

mirror Mirror Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

relay RPC Relay Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

one-shot One Shot commands for new and returning users who need a preset environment type. These commands use reasonable defaults to provide a single command out of box experience.

rapid-fire Commands for performing load tests on a Solo deployment

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

init

init

Initialize local environment

Options:

--cache-dir Local cache directory [string] [default: "/home/runner/.solo/cache"]

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-q, --quiet-mode Quiet mode, do not prompt for confirmation [boolean] [default: false]

-u, --user Optional user name used for local configuration. Only accepts letters and numbers. Defaults to the username provided by the OS [string]

-v, --version Show version number [boolean]

config

config

Backup and restore component configurations for Solo deployments. These commands display what would be backed up or restored without performing actual operations.

Commands:

config ops Configuration backup and restore operations

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

config ops

config ops

Configuration backup and restore operations

Commands:

config ops backup Display backup plan for all component configurations of a deployment. Shows what files and configurations would be backed up without performing the actual backup.

config ops restore-config Restore component configurations from backup. Imports ConfigMaps, Secrets, logs, and state files for a running deployment.

config ops restore-clusters Restore Kind clusters from backup directory structure. Creates clusters, sets up Docker network, installs MetalLB, and initializes cluster configurations. Does not deploy network components.

config ops restore-network Deploy network components to existing clusters from backup. Deploys consensus nodes, block nodes, mirror nodes, explorers, and relay nodes. Requires clusters to be already created (use restore-clusters first).

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

block

block

Block Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

Commands:

block node Create, manage, or destroy block node instances. Operates on a single block node instance at a time.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

block node

block node

Create, manage, or destroy block node instances. Operates on a single block node instance at a time.

Commands:

block node add Creates and configures a new block node instance for the specified deployment using the specified Kubernetes cluster. The cluster must be accessible and attached to the specified deployment.

block node destroy Destroys a single block node instance in the specified deployment. Requires access to all Kubernetes clusters attached to the deployment.

block node upgrade Upgrades a single block node instance in the specified deployment. Requires access to all Kubernetes clusters attached to the deployment.

block node add-external Add an external block node for the specified deployment. You can specify the priority and consensus nodes to which to connect or use the default settings.

block node delete-external Deletes an external block node from the specified deployment.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

cluster-ref

cluster-ref

Manages the relationship between Kubernetes context names and Solo cluster references which are an alias for a kubernetes context.

Commands:

cluster-ref config List, create, manage, and remove associations between Kubernetes contexts and Solo cluster references.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

cluster-ref config

cluster-ref config

List, create, manage, and remove associations between Kubernetes contexts and Solo cluster references.

Commands:

cluster-ref config connect Creates a new internal Solo cluster name to a Kubernetes context or maps a Kubernetes context to an existing internal Solo cluster reference

cluster-ref config disconnect Removes the Kubernetes context associated with an internal Solo cluster reference.

cluster-ref config list Lists the configured Kubernetes context to Solo cluster reference mappings.

cluster-ref config info Displays the status information and attached deployments for a given Solo cluster reference mapping.

cluster-ref config setup Setup cluster with shared components

cluster-ref config reset Uninstall shared components from cluster

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus

consensus

Consensus Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

Commands:

consensus network Ledger/network wide consensus operations such as freeze, upgrade, and deploy. Operates on the entire ledger and all consensus node instances.

consensus node List, create, manage, or destroy consensus node instances. Operates on a single consensus node instance at a time.

consensus state List, download, and upload consensus node state backups to/from individual consensus node instances.

consensus dev-node-add Dev operations for adding consensus nodes.

consensus dev-node-update Dev operations for updating consensus nodes

consensus dev-node-upgrade Dev operations for upgrading consensus nodes

consensus dev-node-delete Dev operations for delete consensus nodes

consensus dev-freeze Dev operations for freezing consensus nodes

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus network

consensus network

Ledger/network wide consensus operations such as freeze, upgrade, and deploy. Operates on the entire ledger and all consensus node instances.

Commands:

consensus network deploy Installs and configures all consensus nodes for the deployment.

consensus network destroy Removes all consensus network components from the deployment.

consensus network freeze Initiates a network freeze for scheduled maintenance or upgrades

consensus network upgrade Upgrades the software version running on all consensus nodes.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus node

consensus node

List, create, manage, or destroy consensus node instances. Operates on a single consensus node instance at a time.

Commands:

consensus node setup Setup node with a specific version of Hedera platform

consensus node start Start a node

consensus node stop Stop a node

consensus node restart Restart all nodes of the network

consensus node refresh Reset and restart a node

consensus node add Adds a node with a specific version of Hedera platform

consensus node update Update a node with a specific version of Hedera platform

consensus node destroy Delete a node with a specific version of Hedera platform

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus state

consensus state

List, download, and upload consensus node state backups to/from individual consensus node instances.

Commands:

consensus state download Downloads a signed state from consensus node/nodes.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus dev-node-add

consensus dev-node-add

Dev operations for adding consensus nodes.

Commands:

consensus dev-node-add prepare Prepares the addition of a node with a specific version of Hedera platform

consensus dev-node-add submit-transactions Submits NodeCreateTransaction and Upgrade transactions to the network nodes

consensus dev-node-add execute Executes the addition of a previously prepared node

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus dev-node-update

consensus dev-node-update

Dev operations for updating consensus nodes

Commands:

consensus dev-node-update prepare Prepare the deployment to update a node with a specific version of Hedera platform

consensus dev-node-update submit-transactions Submit transactions for updating a node with a specific version of Hedera platform

consensus dev-node-update execute Executes the updating of a node with a specific version of Hedera platform

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus dev-node-upgrade

consensus dev-node-upgrade

Dev operations for upgrading consensus nodes

Commands:

consensus dev-node-upgrade prepare Prepare for upgrading network

consensus dev-node-upgrade submit-transactions Submit transactions for upgrading network

consensus dev-node-upgrade execute Executes the upgrading the network

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus dev-node-delete

consensus dev-node-delete

Dev operations for delete consensus nodes

Commands:

consensus dev-node-delete prepare Prepares the deletion of a node with a specific version of Hedera platform

consensus dev-node-delete submit-transactions Submits transactions to the network nodes for deleting a node

consensus dev-node-delete execute Executes the deletion of a previously prepared node

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

consensus dev-freeze

consensus dev-freeze

Dev operations for freezing consensus nodes

Commands:

consensus dev-freeze prepare-upgrade Prepare the network for a Freeze Upgrade operation

consensus dev-freeze freeze-upgrade Performs a Freeze Upgrade operation on the network after it has been prepared with prepare-upgrade

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

deployment

deployment

Create, modify, and delete deployment configurations. Deployments are required for most of the other commands.

Commands:

deployment cluster View and manage Solo cluster references used by a deployment.

deployment config List, view, create, delete, and import deployments. These commands affect the local configuration only.

deployment diagnostics Capture diagnostic information such as logs, signed states, and ledger/network/node configurations.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

deployment cluster

deployment cluster

View and manage Solo cluster references used by a deployment.

Commands:

deployment cluster attach Attaches a cluster reference to a deployment.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

deployment config

deployment config

List, view, create, delete, and import deployments. These commands affect the local configuration only.

Commands:

deployment config list Lists all local deployment configurations or deployments in a specific cluster.

deployment config create Creates a new local deployment configuration.

deployment config delete Removes a local deployment configuration.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

deployment diagnostics

deployment diagnostics

Capture diagnostic information such as logs, signed states, and ledger/network/node configurations.

Commands:

deployment diagnostics all Captures logs, configs, and diagnostic artifacts from all consensus nodes and test connections.

deployment diagnostics connections Tests connections to Consensus, Relay, Explorer, Mirror and Block nodes.

deployment diagnostics logs Get logs and configuration files from consensus node/nodes.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]
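The three diagnostics subcommands differ only in their last word, so a small wrapper can validate the choice before anything is run. The following is a sketch under stated assumptions: `diag_cmd` is a hypothetical helper, the binary name `solo` is assumed, and the function only builds the command string rather than executing it, because the flags each subcommand needs are not listed here.

```shell
#!/bin/sh
# Sketch only: build a `deployment diagnostics` command line for a known subcommand.
# Assumption: the binary is called `solo`. Nothing is executed here.
set -u

# Echo the command for all|connections|logs; fail for anything else.
diag_cmd() {
  case "${1:-}" in
    all|connections|logs)
      echo "solo deployment diagnostics $1"
      ;;
    *)
      echo "unknown diagnostics subcommand: ${1:-<none>}" >&2
      return 1
      ;;
  esac
}

diag_cmd logs
```

Rejecting unknown names up front keeps a typo such as `log` from reaching the CLI at all.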

explorer

explorer

Explorer Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

Commands:

explorer node List, create, manage, or destroy explorer node instances. Operates on a single explorer node instance at a time.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

explorer node

explorer node

List, create, manage, or destroy explorer node instances. Operates on a single explorer node instance at a time.

Commands:

explorer node add Adds and configures a new node instance.

explorer node destroy Deletes the specified node from the deployment.

explorer node upgrade Upgrades the specified node in the deployment.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

keys

keys

Consensus key generation operations

Commands:

keys consensus Generate unique cryptographic keys (gossip or grpc TLS keys) for the Consensus Node instances.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

keys consensus

keys consensus

Generate unique cryptographic keys (gossip or grpc TLS keys) for the Consensus Node instances.

Commands:

keys consensus generate Generates TLS keys required for consensus node communication.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

ledger

ledger

System, Account, and Crypto ledger-based management operations. These commands require an operational set of consensus nodes and may require an operational mirror node.

Commands:

ledger system Perform a full ledger initialization on a new deployment, rekey privileged/system accounts, or set up network staking parameters.

ledger account View, list, create, update, delete, and import ledger accounts.

ledger file Upload or update files on the Hiero network.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

ledger system

ledger system

Perform a full ledger initialization on a new deployment, rekey privileged/system accounts, or set up network staking parameters.

Commands:

ledger system init Re-keys ledger system accounts and consensus node admin keys with uniquely generated ED25519 private keys and stakes consensus nodes.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

ledger account

ledger account

View, list, create, update, delete, and import ledger accounts.

Commands:

ledger account update Updates an existing ledger account.

ledger account create Creates a new ledger account.

ledger account info Gets the account info including the current amount of HBAR

ledger account predefined Creates predefined accounts used by one-shot deployments.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

ledger file

ledger file

Upload or update files on the Hiero network.

Commands:

ledger file create Create a new file on the Hiero network

ledger file update Update an existing file on the Hiero network

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

mirror

mirror

Mirror Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

Commands:

mirror node List, create, manage, or destroy mirror node instances. Operates on a single mirror node instance at a time.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

mirror node

mirror node

List, create, manage, or destroy mirror node instances. Operates on a single mirror node instance at a time.

Commands:

mirror node add Adds and configures a new node instance.

mirror node destroy Deletes the specified node from the deployment.

mirror node upgrade Upgrades the specified node in the deployment.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access the network services [boolean] [default: true]

-v, --version Show version number [boolean]

relay

relay

RPC Relay Node operations for creating, modifying, and destroying resources. These commands require the presence of an existing deployment.

Commands:

relay node List, create, manage, or destroy relay node instances. Operates on a single relay node instance at a time.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

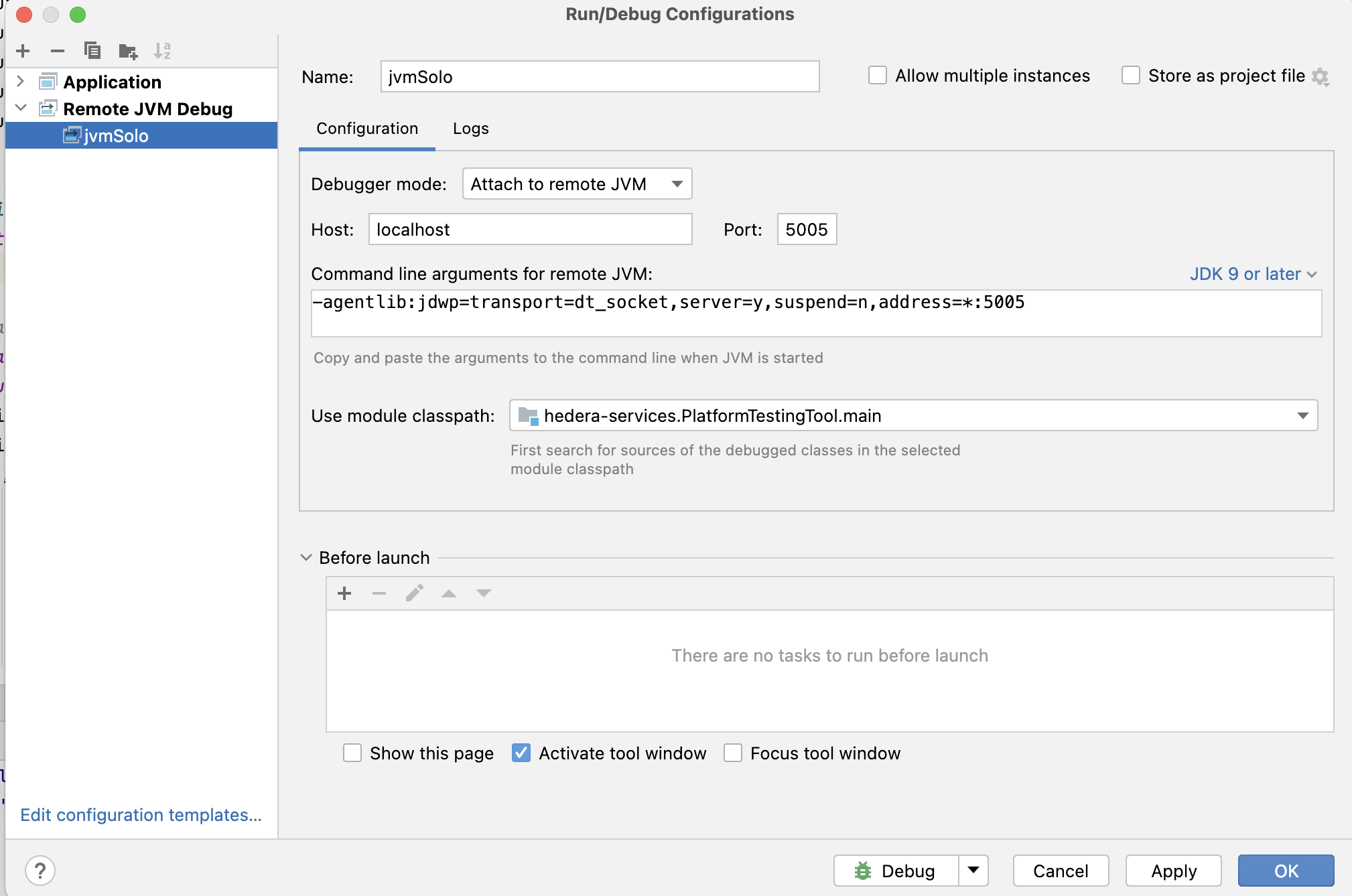

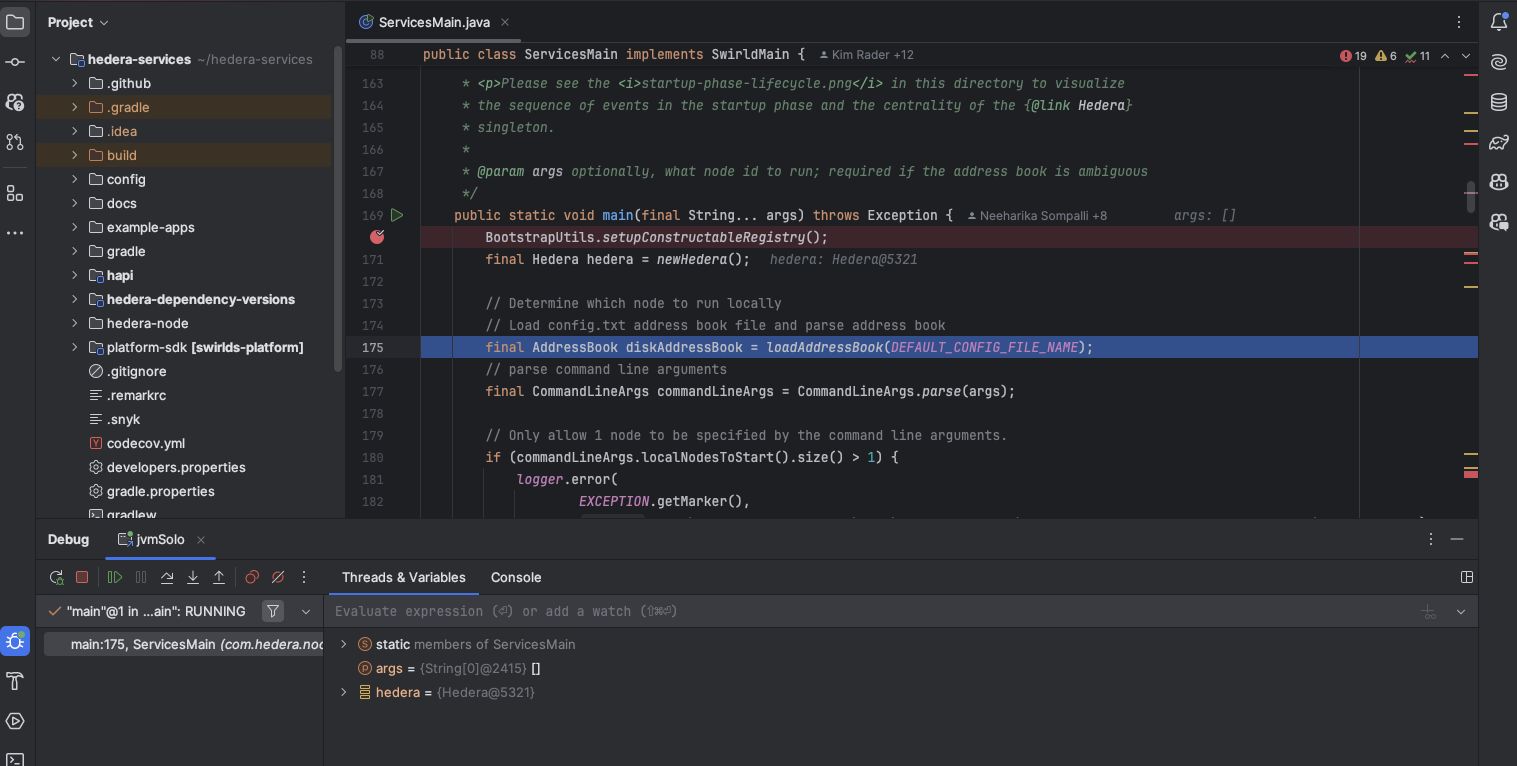

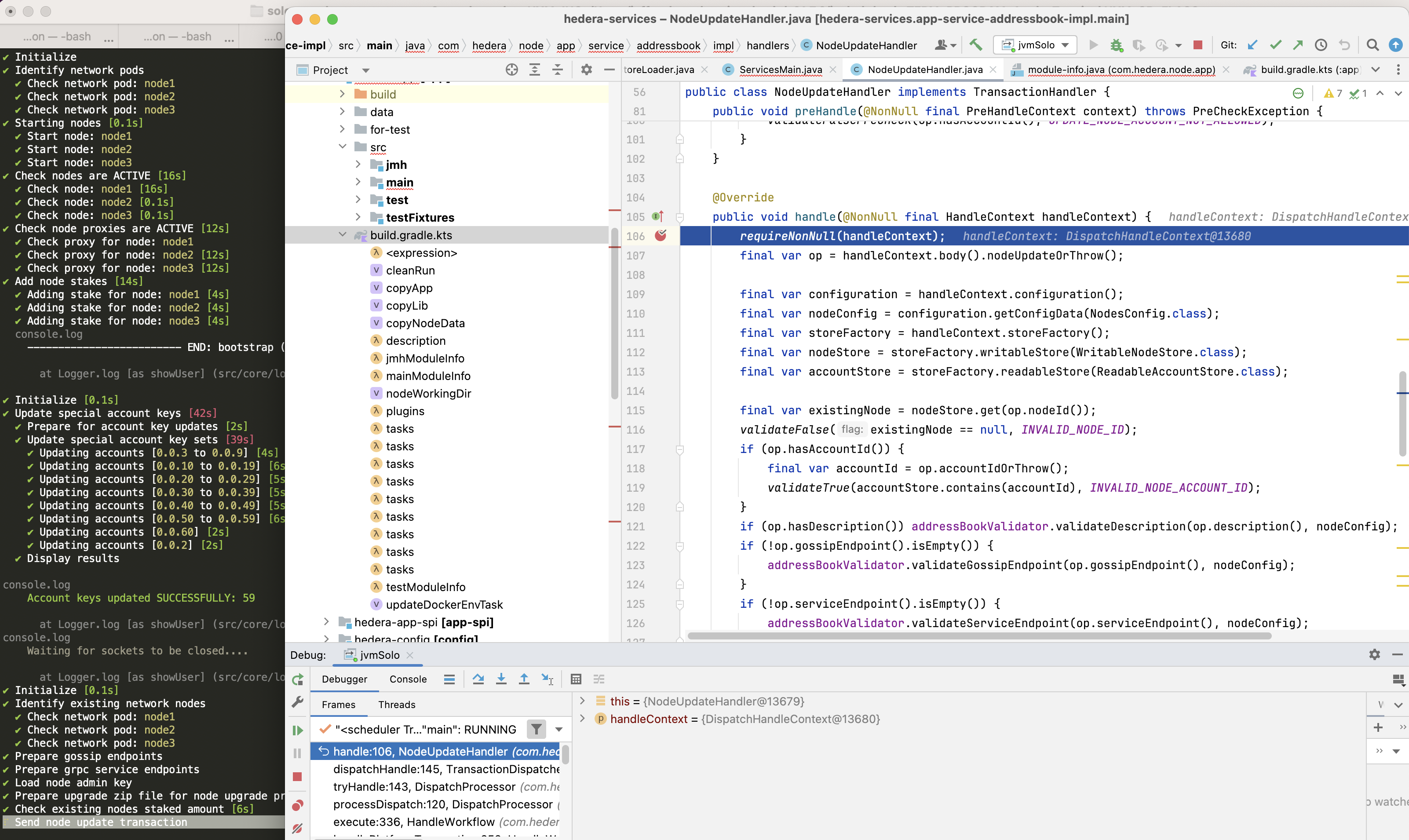

the network services