The documentation section provides a comprehensive guide to using Solo to launch a Hiero Consensus Node network, including setup instructions, usage guides, and information for developers. It covers everything from installation to advanced features and troubleshooting.

Documentation

- 1: Getting Started

- 2: Step By Step Guide

- 3: Solo CLI User Manual

- 4: Solo CLI Commands

- 5: FAQ

- 6: Using Solo with Mirror Node

- 7: Using Solo with Hiero JavaScript SDK

- 8: Hiero Consensus Node Platform Developer

- 9: Hiero Consensus Node Execution Developer

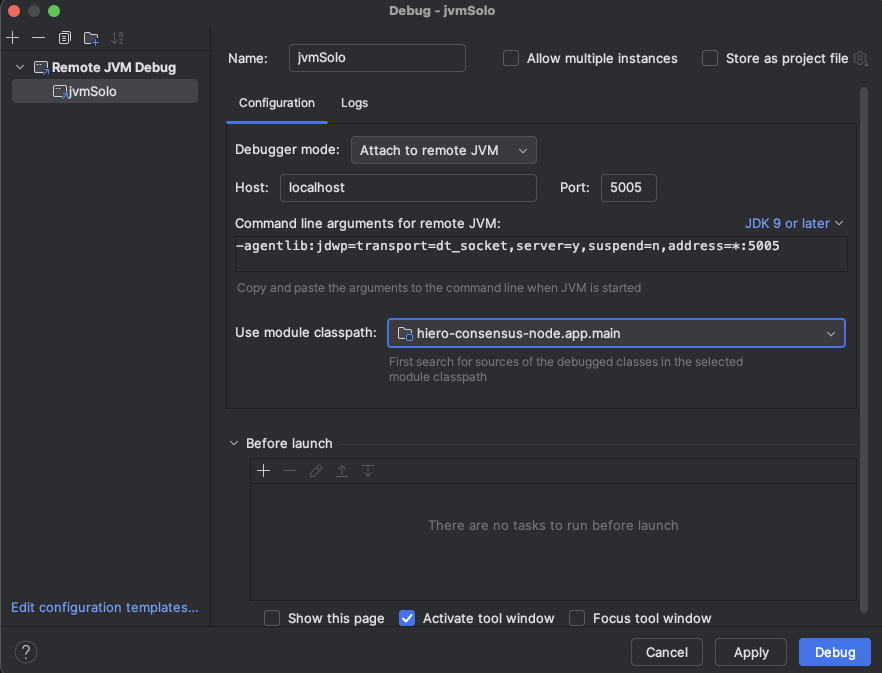

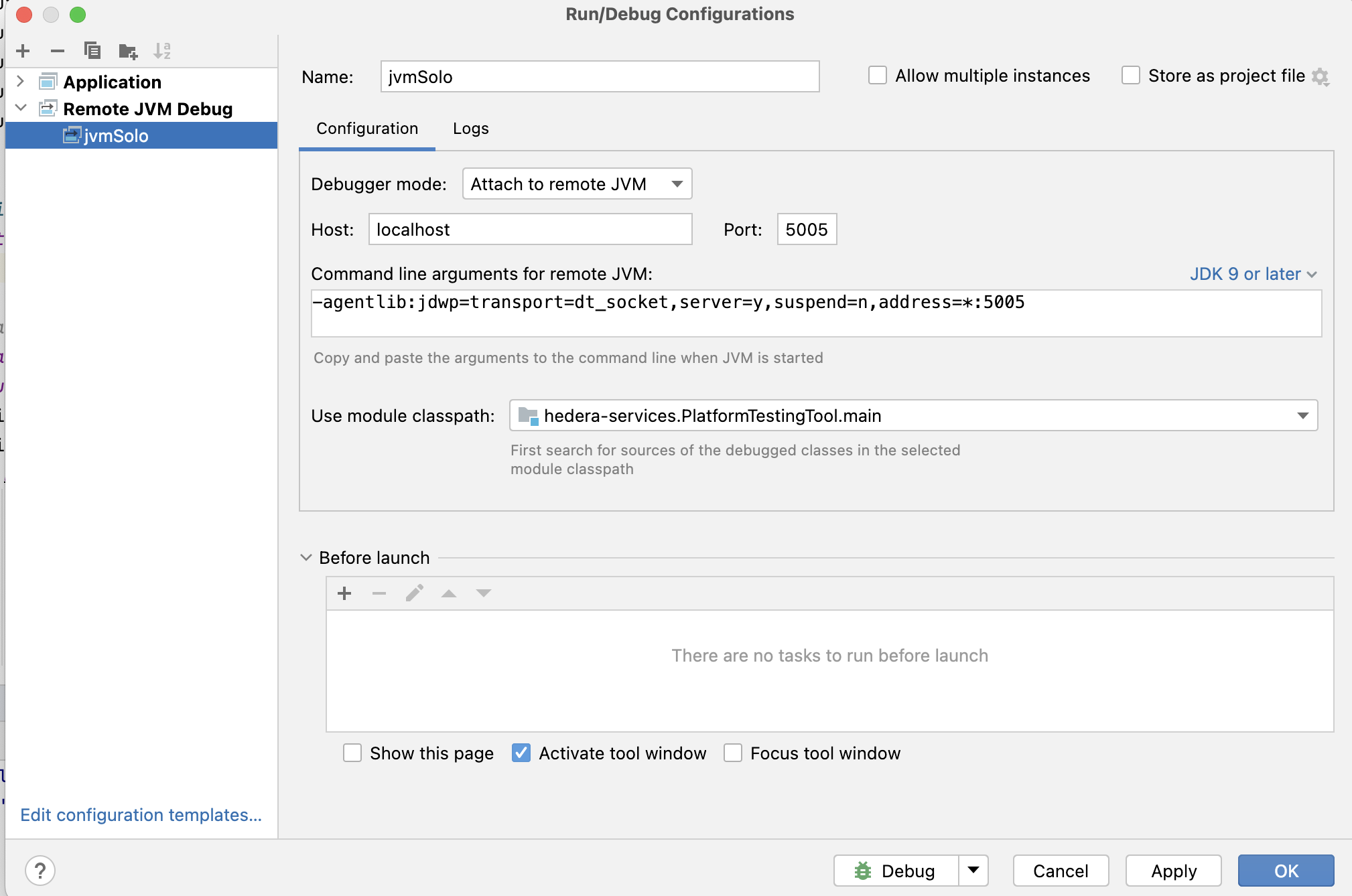

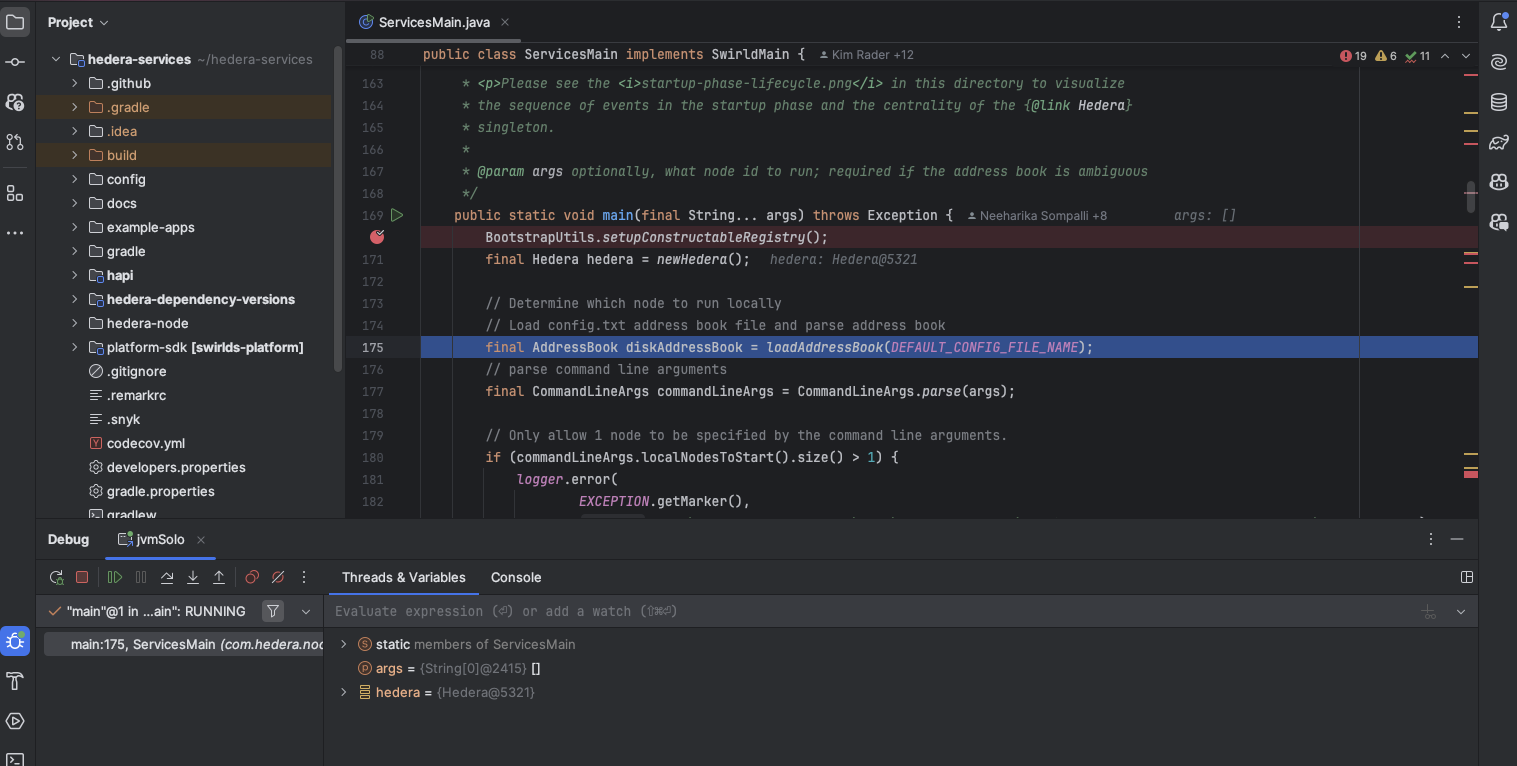

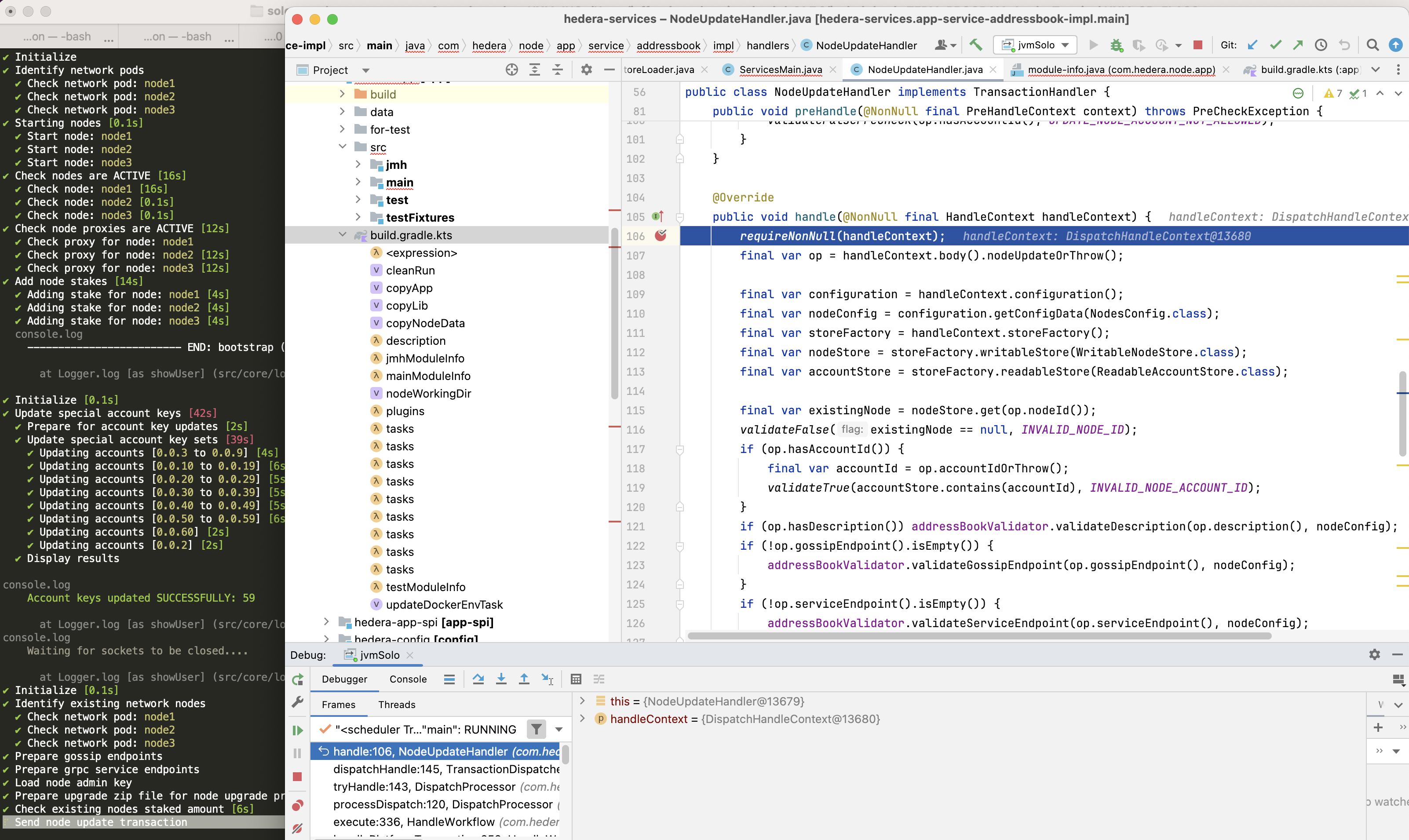

- 10: Attach JVM Debugger and Retrieve Logs

- 11: Using Environment Variables

1 - Getting Started

[!WARNING]

Any version of Solo prior to v0.35.3 will fail on Apple M3/M4 chipsets due to a known issue with Java 21 and these chipsets.

Solo

An opinionated CLI tool to deploy and manage standalone test networks.

Requirements

| Solo Version | Node.js | Kind | Solo Chart | Hedera | Kubernetes | Kubectl | Helm | k9s | Docker Resources |
|---|---|---|---|---|---|---|---|---|---|
| 0.29.0 | >= 20.14.0 (lts/hydrogen) | >= v1.29.1 | v0.30.0 | v0.53.0 - <= v0.57.0 | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |
| 0.30.0 | >= 20.14.0 (lts/hydrogen) | >= v1.29.1 | v0.30.0 | v0.54.0 - <= v0.57.0 | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |
| 0.31.4 | >= 20.18.0 (lts/iron) | >= v1.29.1 | v0.31.4 | v0.54.0 - <= v0.57.0 | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |
| 0.32.0 | >= 20.18.0 (lts/iron) | >= v1.29.1 | v0.38.2 | v0.58.1 - <= v0.59.0 | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |
| 0.33.0 | >= 20.18.0 (lts/iron) | >= v1.29.1 | v0.38.2 | v0.58.1 - <= v0.59.0 | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |
| 0.34.0 | >= 20.18.0 (lts/iron) | >= v1.29.1 | v0.42.10 | v0.58.1+ | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |
| 0.35.0 | >= 20.18.0 (lts/iron) | >= v1.29.1 | v0.44.0 | v0.58.1+ | >= v1.27.3 | >= v1.27.3 | v3.14.2 | >= v0.27.4 | Memory >= 8GB, CPU >= 4 |

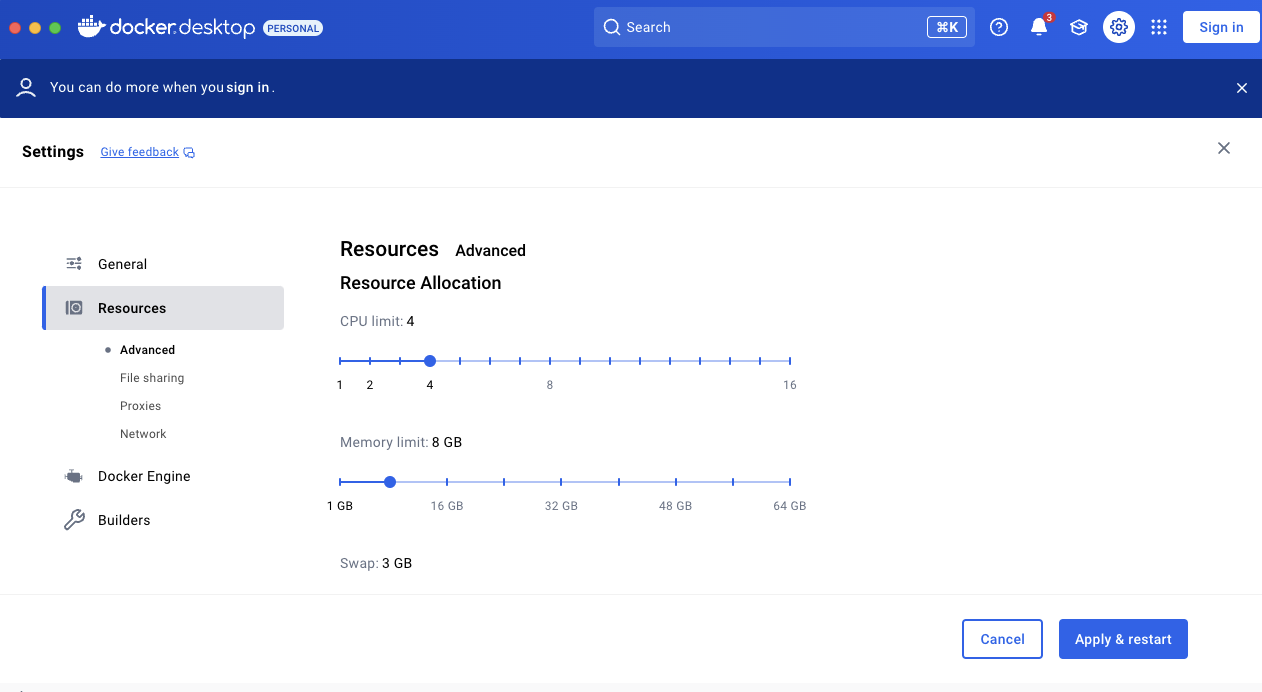

Hardware Requirements

To run a three-node network, you will need to set up Docker Desktop with at least 8GB of memory and 4 CPUs.
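As a rough pre-flight check of the requirement above, the following sketch reads the memory and CPU count that the Docker engine reports and compares them with the documented minimums. The `has_enough_resources` helper and the decimal 8 GB threshold are assumptions made for illustration; the total that Docker reports can differ slightly from the value configured in Docker Desktop, so adjust the threshold if needed.

```bash
#!/usr/bin/env bash
# Documented minimums: 8 GB memory and 4 CPUs.
# Assumption: 8 GB is interpreted as 8 * 10^9 bytes.
MIN_MEM_BYTES=$((8 * 1000 * 1000 * 1000))
MIN_CPUS=4

# has_enough_resources MEM_BYTES CPUS
# Hypothetical helper: succeeds when both values meet the minimums.
has_enough_resources() {
  [ "$1" -ge "$MIN_MEM_BYTES" ] && [ "$2" -ge "$MIN_CPUS" ]
}

# Query Docker only when the CLI exists and the engine answers.
if command -v docker >/dev/null 2>&1 &&
   info=$(docker info --format '{{.MemTotal}} {{.NCPU}}' 2>/dev/null); then
  mem_bytes=${info% *}   # text before the space: total memory in bytes
  cpus=${info#* }        # text after the space: number of CPUs
  if has_enough_resources "$mem_bytes" "$cpus"; then
    echo "Docker resources look sufficient: ${mem_bytes} bytes, ${cpus} CPUs"
  else
    echo "Docker resources are below the documented minimum: ${mem_bytes} bytes, ${cpus} CPUs" >&2
  fi
else
  echo "docker is not available; skipping the resource check" >&2
fi
```

The script only reports; it never changes Docker settings and does not stop on a failed check.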

Setup

# install specific nodejs version

# nvm install <version>

# install nodejs version 20.18.0

nvm install v20.18.0

# lists available node versions already installed

nvm ls

# switch to the selected node version

# nvm use <version>

nvm use v20.18.0
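To confirm that the active Node.js version meets the requirement, a small check such as the one below may help. It is a sketch: `version_ge` is a hypothetical helper, and it assumes a `sort` that supports the `-V` (version sort) option.

```bash
#!/usr/bin/env bash
# Minimum version used in the setup commands above.
REQUIRED_NODE_VERSION="20.18.0"

# version_ge A B
# Hypothetical helper: succeeds when version A is greater than or equal to B.
# The smaller of the two versions sorts first, so A >= B exactly when B is first.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

if command -v node >/dev/null 2>&1; then
  current=$(node --version)   # prints something like "v20.18.0"
  current=${current#v}        # strip the leading "v"
  if version_ge "$current" "$REQUIRED_NODE_VERSION"; then
    echo "Node.js ${current} satisfies >= ${REQUIRED_NODE_VERSION}"
  else
    echo "Node.js ${current} is older than ${REQUIRED_NODE_VERSION}; run: nvm install v${REQUIRED_NODE_VERSION}" >&2
  fi
else
  echo "node was not found on PATH; install it with nvm first" >&2
fi
```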

Install Solo

- Run

npm install -g @hashgraph/solo

Documentation

Contributing

Contributions are welcome. See the contributing guide to learn how you can get involved.

Code of Conduct

This project is governed by the Contributor Covenant Code of Conduct. By participating, you are expected to uphold this code of conduct.

License

2 - Step By Step Guide

Solo User Guide

Table of Contents

- Setup Kubernetes cluster

- Step by Step Instructions

- Initialize solo directories

- Generate pem formatted node keys

- Create a deployment in the specified clusters

- Setup cluster with shared components

- Create a solo deployment

- Deploy helm chart with Hedera network components

- Setup node with Hedera platform software

- Deploy mirror node

- Deploy explorer mode

- Deploy a JSON RPC relay

- Execution Developer

- Destroy relay node

- Destroy mirror node

- Destroy explorer node

- Destroy network

If you want more control or need a customized setup, follow these step-by-step instructions to set up and deploy a Solo network.

Setup Kubernetes cluster

Remote cluster

- You may use a remote Kubernetes cluster. In this case, ensure the Kubernetes context is set up correctly.

kubectl config use-context <context-name>

Local cluster

- You may use kind or microk8s to create a cluster. In this case, ensure your Docker engine has enough resources (e.g. Memory >= 8GB, CPU >= 4). Below we show how you can use kind to create a cluster.

First, use the following command to set up the environment variables:

export SOLO_CLUSTER_NAME=solo

export SOLO_NAMESPACE=solo

export SOLO_CLUSTER_SETUP_NAMESPACE=solo-cluster

export SOLO_DEPLOYMENT=solo-deployment

Then run the following command to create the cluster. kind also sets the kubectl context to the new cluster:

kind create cluster -n "${SOLO_CLUSTER_NAME}"

Example output

Creating cluster "solo-e2e" ...

• Ensuring node image (kindest/node:v1.32.2) 🖼 ...

✓ Ensuring node image (kindest/node:v1.32.2) 🖼

• Preparing nodes 📦 ...

✓ Preparing nodes 📦

• Writing configuration 📜 ...

✓ Writing configuration 📜

• Starting control-plane 🕹️ ...

✓ Starting control-plane 🕹️

• Installing CNI 🔌 ...

✓ Installing CNI 🔌

• Installing StorageClass 💾 ...

✓ Installing StorageClass 💾

Set kubectl context to "kind-solo-e2e"

You can now use your cluster with:

kubectl cluster-info --context kind-solo-e2e

Have a nice day! 👋
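When the setup is repeated, creating a cluster that already exists fails. The sketch below creates the cluster only when `kind get clusters` does not list it, then selects its kubectl context. The helper names `kind_context_name` and `ensure_kind_cluster` are hypothetical; the `kind-<name>` context naming follows the example output above.

```bash
#!/usr/bin/env bash
# Default matches the export above; an already exported value wins.
: "${SOLO_CLUSTER_NAME:=solo}"

# kind_context_name NAME
# Hypothetical helper: kind names the kubectl context "kind-<cluster name>".
kind_context_name() {
  printf 'kind-%s\n' "$1"
}

# ensure_kind_cluster NAME
# Hypothetical helper: create the cluster only if it is not listed yet,
# then switch kubectl to its context.
ensure_kind_cluster() {
  local name="$1"
  if kind get clusters 2>/dev/null | grep -qx "$name"; then
    echo "kind cluster '${name}' already exists"
  else
    kind create cluster -n "$name"
  fi
  kubectl config use-context "$(kind_context_name "$name")"
}

# Usage (not executed here):
#   ensure_kind_cluster "${SOLO_CLUSTER_NAME}"
```

The block only defines functions, so sourcing it does not touch any cluster until `ensure_kind_cluster` is called.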

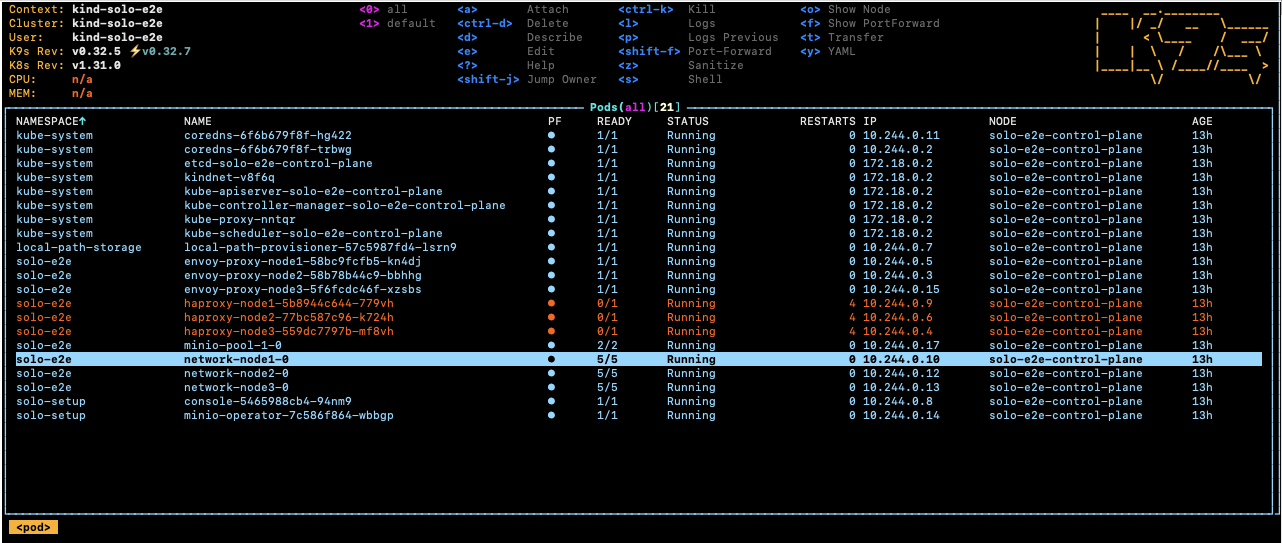

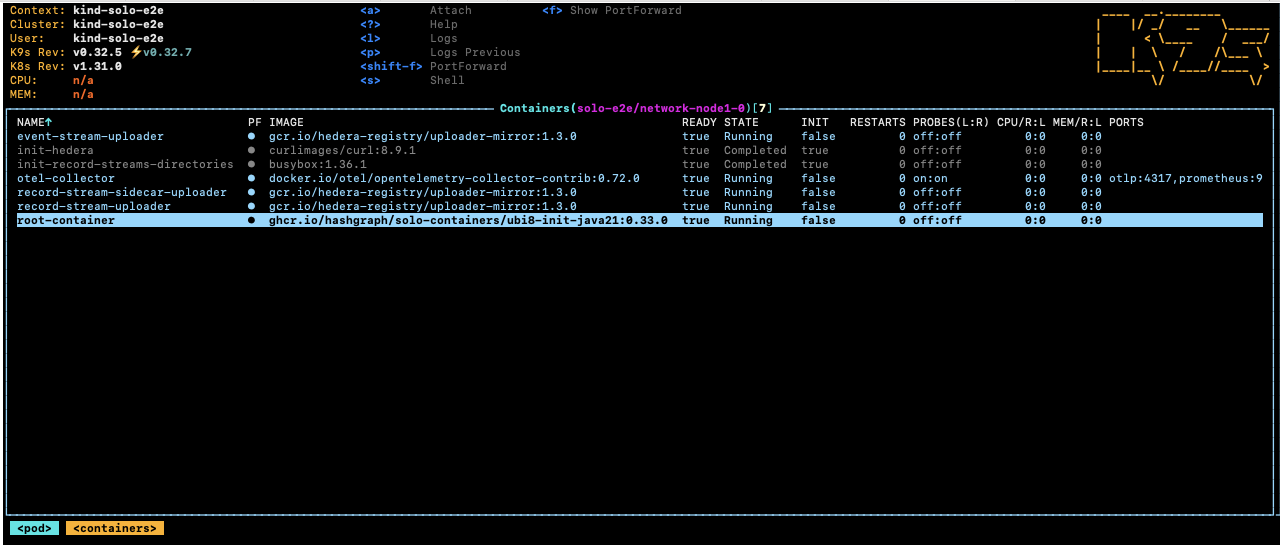

You may now view pods in your cluster using k9s -A as below:

Context: kind-solo <0> all <a> Attach <ctr… ____ __.________

Cluster: kind-solo <ctrl-d> Delete <l> | |/ _/ __ \______

User: kind-solo <d> Describe <p> | < \____ / ___/

K9s Rev: v0.32.5 <e> Edit <shif| | \ / /\___ \

K8s Rev: v1.27.3 <?> Help <z> |____|__ \ /____//____ >

CPU: n/a <shift-j> Jump Owner <s> \/ \/

MEM: n/a

┌───────────────────────────────────────────────── Pods(all)[11] ─────────────────────────────────────────────────┐

│ NAMESPACE↑ NAME PF READY STATUS RESTARTS IP NODE │

│ solo-setup console-557956d575-4r5xm ● 1/1 Running 0 10.244.0.5 solo-con │

│ solo-setup minio-operator-7d575c5f84-8shc9 ● 1/1 Running 0 10.244.0.6 solo-con │

│ kube-system coredns-5d78c9869d-6cfbg ● 1/1 Running 0 10.244.0.4 solo-con │

│ kube-system coredns-5d78c9869d-gxcjz ● 1/1 Running 0 10.244.0.3 solo-con │

│ kube-system etcd-solo-control-plane ● 1/1 Running 0 172.18.0.2 solo-con │

│ kube-system kindnet-k75z6 ● 1/1 Running 0 172.18.0.2 solo-con │

│ kube-system kube-apiserver-solo-control-plane ● 1/1 Running 0 172.18.0.2 solo-con │

│ kube-system kube-controller-manager-solo-control-plane ● 1/1 Running 0 172.18.0.2 solo-con │

│ kube-system kube-proxy-cct7t ● 1/1 Running 0 172.18.0.2 solo-con │

│ kube-system kube-scheduler-solo-control-plane ● 1/1 Running 0 172.18.0.2 solo-con │

│ local-path-storage local-path-provisioner-6bc4bddd6b-gwdp6 ● 1/1 Running 0 10.244.0.2 solo-con │

│ │

│ │

└─────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

Step by Step Instructions

Initialize solo directories:

# reset .solo directory

rm -rf ~/.solo

solo init

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : init

**********************************************************************************

❯ Setup home directory and cache

✔ Setup home directory and cache

❯ Check dependencies

❯ Check dependency: helm [OS: darwin, Release: 23.6.0, Arch: arm64]

✔ Check dependency: helm [OS: darwin, Release: 23.6.0, Arch: arm64]

✔ Check dependencies

❯ Create local configuration

↓ Create local configuration [SKIPPED: Create local configuration]

❯ Setup chart manager

push repo hedera-json-rpc-relay -> https://hiero-ledger.github.io/hiero-json-rpc-relay/charts

push repo mirror -> https://hashgraph.github.io/hedera-mirror-node/charts

push repo haproxy-ingress -> https://haproxy-ingress.github.io/charts

✔ Setup chart manager

❯ Copy templates in '/Users/user/.solo/cache'

***************************************************************************************

Note: solo stores various artifacts (config, logs, keys etc.) in its home directory: /Users/user/.solo

If a full reset is needed, delete the directory or relevant sub-directories before running 'solo init'.

***************************************************************************************

✔ Copy templates in '/Users/user/.solo/cache'
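The reset shown above deletes `~/.solo`, including any keys and logs stored there. If those artifacts might still be needed, one alternative is to move the directory aside before running `solo init`. This is a sketch, not part of Solo: `backup_solo_home` and the `.bak.<timestamp>` naming are assumptions.

```bash
#!/usr/bin/env bash
# backup_solo_home [DIR]
# Hypothetical helper: move DIR (default: ~/.solo) to DIR.bak.<timestamp>
# and print the backup path. Does nothing when DIR does not exist.
backup_solo_home() {
  local dir="${1:-${HOME}/.solo}"
  [ -d "$dir" ] || return 0
  local backup
  backup="${dir}.bak.$(date +%Y%m%d%H%M%S)"
  mv "$dir" "$backup" && printf '%s\n' "$backup"
}

# Usage (not executed here):
#   backup_solo_home && solo init
```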

Create a deployment in the specified clusters, generate RemoteConfig and LocalConfig objects.

- Associates a cluster reference to a k8s context

solo cluster-ref connect --cluster-ref kind-${SOLO_CLUSTER_SETUP_NAMESPACE} --context kind-${SOLO_CLUSTER_NAME}

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : cluster-ref connect --cluster-ref kind-solo-e2e --context kind-solo-e2e

**********************************************************************************

❯ Initialize

✔ Initialize

❯ Validating cluster ref:

✔ kind-solo-e2e

❯ Test connection to cluster:

✔ Test connection to cluster: kind-solo-e2e

❯ Associate a context with a cluster reference:

✔ Associate a context with a cluster reference: kind-solo-e2e

- Create a deployment

solo deployment create -n "${SOLO_NAMESPACE}" --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : deployment create --namespace solo --deployment solo-deployment --realm 0 --shard 0

Kubernetes Namespace : solo

**********************************************************************************

❯ Initialize

✔ Initialize

❯ Add deployment to local config

✔ Adding deployment: solo-deployment with namespace: solo to local config

- Add a cluster to deployment

solo deployment add-cluster --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_SETUP_NAMESPACE} --num-consensus-nodes 3

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : deployment add-cluster --deployment solo-deployment --cluster-ref kind-solo-e2e --num-consensus-nodes 3

**********************************************************************************

❯ Initialize

✔ Initialize

❯ Verify args

✔ Verify args

❯ check network state

✔ check network state

❯ Test cluster connection

✔ Test cluster connection: kind-solo-e2e, context: kind-solo-e2e

❯ Verify prerequisites

✔ Verify prerequisites

❯ add cluster-ref in local config deployments

✔ add cluster-ref: kind-solo-e2e for deployment: solo-deployment in local config

❯ create remote config for deployment

✔ create remote config for deployment: solo-deployment in cluster: kind-solo-e2e

Generate pem formatted node keys

solo node keys --gossip-keys --tls-keys -i node1,node2,node3 --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : node keys --gossip-keys --tls-keys --node-aliases node1,node2,node3 --deployment solo-deployment

**********************************************************************************

❯ Initialize

✔ Initialize

❯ Generate gossip keys

❯ Backup old files

✔ Backup old files

❯ Gossip key for node: node1

✔ Gossip key for node: node1

❯ Gossip key for node: node2

✔ Gossip key for node: node2

❯ Gossip key for node: node3

✔ Gossip key for node: node3

✔ Generate gossip keys

❯ Generate gRPC TLS Keys

❯ Backup old files

❯ TLS key for node: node1

❯ TLS key for node: node2

❯ TLS key for node: node3

✔ Backup old files

✔ TLS key for node: node3

✔ TLS key for node: node2

✔ TLS key for node: node1

✔ Generate gRPC TLS Keys

❯ Finalize

✔ Finalize

PEM key files are generated in the ~/.solo/cache/keys directory.

hedera-node1.crt hedera-node3.crt s-private-node1.pem s-public-node1.pem unused-gossip-pem

hedera-node1.key hedera-node3.key s-private-node2.pem s-public-node2.pem unused-tls

hedera-node2.crt hedera-node4.crt s-private-node3.pem s-public-node3.pem

hedera-node2.key hedera-node4.key s-private-node4.pem s-public-node4.pem
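To check that key generation produced a complete set for each node, the file name patterns visible in the listing above can be tested directly. The sketch below assumes those four patterns per node alias; `expected_key_files` and `missing_key_files` are hypothetical helpers.

```bash
#!/usr/bin/env bash
# expected_key_files NODE...
# Hypothetical helper: print the four file names that the listing above
# shows for each node alias.
expected_key_files() {
  local node
  for node in "$@"; do
    printf '%s\n' \
      "hedera-${node}.crt" \
      "hedera-${node}.key" \
      "s-private-${node}.pem" \
      "s-public-${node}.pem"
  done
}

# missing_key_files DIR NODE...
# Hypothetical helper: print every expected file that is absent from DIR.
missing_key_files() {
  local dir="$1"
  shift
  expected_key_files "$@" | while IFS= read -r f; do
    [ -f "${dir}/${f}" ] || printf '%s\n' "$f"
  done
}

# Usage (not executed here): empty output means nothing is missing.
#   missing_key_files "${HOME}/.solo/cache/keys" node1 node2 node3
```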

Setup cluster with shared components

solo cluster-ref setup -s "${SOLO_CLUSTER_SETUP_NAMESPACE}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : cluster-ref setup --cluster-setup-namespace solo-cluster

**********************************************************************************

❯ Initialize

✔ Initialize

❯ Prepare chart values

✔ Prepare chart values

❯ Install 'solo-cluster-setup' chart

********************** Installed solo-cluster-setup chart **********************

Version : 0.50.0

********************************************************************************

✔ Install 'solo-cluster-setup' chart

In a separate terminal, you may run k9s to view the pod status.

Deploy helm chart with Hedera network components

It may take a while (5 to 15 minutes, depending on your internet speed) to download the various Docker images and get the pods started.

If it fails, ensure you have enough resources allocated to the Docker engine and retry the command.

solo network deploy -i node1,node2,node3 --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : network deploy --node-aliases node1,node2,node3 --deployment solo-deployment

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Copy gRPC TLS Certificates

↓ Copy gRPC TLS Certificates [SKIPPED: Copy gRPC TLS Certificates]

❯ Check if cluster setup chart is installed

✔ Check if cluster setup chart is installed

❯ Prepare staging directory

❯ Copy Gossip keys to staging

✔ Copy Gossip keys to staging

❯ Copy gRPC TLS keys to staging

✔ Copy gRPC TLS keys to staging

✔ Prepare staging directory

❯ Copy node keys to secrets

❯ Copy TLS keys

❯ Node: node1, cluster: kind-solo-e2e

❯ Node: node2, cluster: kind-solo-e2e

❯ Node: node3, cluster: kind-solo-e2e

❯ Copy Gossip keys

❯ Copy Gossip keys

❯ Copy Gossip keys

✔ Copy Gossip keys

✔ Node: node1, cluster: kind-solo-e2e

✔ Copy Gossip keys

✔ Node: node3, cluster: kind-solo-e2e

✔ Copy Gossip keys

✔ Node: node2, cluster: kind-solo-e2e

✔ Copy TLS keys

✔ Copy node keys to secrets

❯ Install chart 'solo-deployment'

*********************** Installed solo-deployment chart ************************

Version : 0.50.0

********************************************************************************

✔ Install chart 'solo-deployment'

❯ Check for load balancer

↓ Check for load balancer [SKIPPED: Check for load balancer]

❯ Redeploy chart with external IP address config

↓ Redeploy chart with external IP address config [SKIPPED: Redeploy chart with external IP address config]

❯ Check node pods are running

❯ Check Node: node1, Cluster: kind-solo-e2e

✔ Check Node: node1, Cluster: kind-solo-e2e

❯ Check Node: node2, Cluster: kind-solo-e2e

✔ Check Node: node2, Cluster: kind-solo-e2e

❯ Check Node: node3, Cluster: kind-solo-e2e

✔ Check Node: node3, Cluster: kind-solo-e2e

✔ Check node pods are running

❯ Check proxy pods are running

❯ Check HAProxy for: node1, cluster: kind-solo-e2e

❯ Check HAProxy for: node2, cluster: kind-solo-e2e

❯ Check HAProxy for: node3, cluster: kind-solo-e2e

❯ Check Envoy Proxy for: node1, cluster: kind-solo-e2e

❯ Check Envoy Proxy for: node2, cluster: kind-solo-e2e

❯ Check Envoy Proxy for: node3, cluster: kind-solo-e2e

✔ Check HAProxy for: node3, cluster: kind-solo-e2e

✔ Check Envoy Proxy for: node1, cluster: kind-solo-e2e

✔ Check HAProxy for: node1, cluster: kind-solo-e2e

✔ Check Envoy Proxy for: node2, cluster: kind-solo-e2e

✔ Check Envoy Proxy for: node3, cluster: kind-solo-e2e

✔ Check HAProxy for: node2, cluster: kind-solo-e2e

✔ Check proxy pods are running

❯ Check auxiliary pods are ready

❯ Check MinIO

✔ Check MinIO

✔ Check auxiliary pods are ready

❯ Add node and proxies to remote config

✔ Add node and proxies to remote config
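Because the deploy step may need to be retried after a failure, a small retry wrapper can save some typing. The `retry` function below is a generic shell sketch, not a Solo feature, and the attempt count and delay in the usage line are arbitrary.

```bash
#!/usr/bin/env bash
# retry ATTEMPTS DELAY_SECONDS CMD...
# Hypothetical helper: run CMD until it succeeds, at most ATTEMPTS times,
# sleeping DELAY_SECONDS between attempts.
retry() {
  local attempts="$1" delay="$2"
  shift 2
  local n=1
  until "$@"; do
    if [ "$n" -ge "$attempts" ]; then
      echo "giving up after ${n} attempts: $*" >&2
      return 1
    fi
    echo "attempt ${n} failed; retrying in ${delay}s: $*" >&2
    sleep "$delay"
    n=$((n + 1))
  done
}

# Usage (not executed here):
#   retry 3 30 solo network deploy -i node1,node2,node3 --deployment "${SOLO_DEPLOYMENT}"
```

A retry does not fix an under-provisioned Docker engine, so check the allocated memory and CPUs first if every attempt fails.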

Setup node with Hedera platform software.

- It may take a while, as it downloads the Hedera platform code from https://builds.hedera.com/

solo node setup -i node1,node2,node3 --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : node setup --node-aliases node1,node2,node3 --deployment solo-deployment

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Validate nodes states

❯ Validating state for node node1

✔ Validating state for node node1 - valid state: requested

❯ Validating state for node node2

✔ Validating state for node node2 - valid state: requested

❯ Validating state for node node3

✔ Validating state for node node3 - valid state: requested

✔ Validate nodes states

❯ Identify network pods

❯ Check network pod: node1

❯ Check network pod: node2

❯ Check network pod: node3

✔ Check network pod: node1

✔ Check network pod: node3

✔ Check network pod: node2

✔ Identify network pods

❯ Fetch platform software into network nodes

❯ Update node: node1 [ platformVersion = v0.59.5, context = kind-solo-e2e ]

❯ Update node: node2 [ platformVersion = v0.59.5, context = kind-solo-e2e ]

❯ Update node: node3 [ platformVersion = v0.59.5, context = kind-solo-e2e ]

✔ Update node: node2 [ platformVersion = v0.59.5, context = kind-solo-e2e ]

✔ Update node: node1 [ platformVersion = v0.59.5, context = kind-solo-e2e ]

✔ Update node: node3 [ platformVersion = v0.59.5, context = kind-solo-e2e ]

✔ Fetch platform software into network nodes

❯ Setup network nodes

❯ Node: node1

❯ Node: node2

❯ Node: node3

❯ Copy configuration files

❯ Copy configuration files

❯ Copy configuration files

✔ Copy configuration files

❯ Set file permissions

✔ Copy configuration files

❯ Set file permissions

✔ Copy configuration files

❯ Set file permissions

✔ Set file permissions

✔ Node: node2

✔ Set file permissions

✔ Node: node3

✔ Set file permissions

✔ Node: node1

✔ Setup network nodes

❯ Change node state to setup in remote config

✔ Change node state to setup in remote config

- Start the nodes

solo node start -i node1,node2,node3 --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : node start --node-aliases node1,node2,node3 --deployment solo-deployment

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Validate nodes states

❯ Validating state for node node1

✔ Validating state for node node1 - valid state: setup

❯ Validating state for node node2

✔ Validating state for node node2 - valid state: setup

❯ Validating state for node node3

✔ Validating state for node node3 - valid state: setup

✔ Validate nodes states

❯ Identify existing network nodes

❯ Check network pod: node1

❯ Check network pod: node2

❯ Check network pod: node3

✔ Check network pod: node1

✔ Check network pod: node2

✔ Check network pod: node3

✔ Identify existing network nodes

❯ Upload state files network nodes

↓ Upload state files network nodes [SKIPPED: Upload state files network nodes]

❯ Starting nodes

❯ Start node: node1

❯ Start node: node2

❯ Start node: node3

✔ Start node: node2

✔ Start node: node3

✔ Start node: node1

✔ Starting nodes

❯ Enable port forwarding for JVM debugger

↓ Enable port forwarding for JVM debugger [SKIPPED: Enable port forwarding for JVM debugger]

❯ Check all nodes are ACTIVE

❯ Check network pod: node1

❯ Check network pod: node2

❯ Check network pod: node3

✔ Check network pod: node1 - status ACTIVE, attempt: 17/300

✔ Check network pod: node3 - status ACTIVE, attempt: 17/300

✔ Check network pod: node2 - status ACTIVE, attempt: 18/300

✔ Check all nodes are ACTIVE

❯ Check node proxies are ACTIVE

❯ Check proxy for node: node1

✔ Check proxy for node: node1

❯ Check proxy for node: node2

✔ Check proxy for node: node2

❯ Check proxy for node: node3

✔ Check proxy for node: node3

✔ Check node proxies are ACTIVE

❯ Change node state to started in remote config

✔ Change node state to started in remote config

❯ Add node stakes

❯ Adding stake for node: node1

✔ Adding stake for node: node1

❯ Adding stake for node: node2

✔ Adding stake for node: node2

❯ Adding stake for node: node3

✔ Adding stake for node: node3

✔ Add node stakes

Deploy mirror node

solo mirror-node deploy --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_SETUP_NAMESPACE}

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : mirror-node deploy --deployment solo-deployment --cluster-ref kind-solo-e2e --quiet-mode

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Enable mirror-node

❯ Prepare address book

✔ Prepare address book

❯ Install mirror ingress controller

↓ Install mirror ingress controller [SKIPPED: Install mirror ingress controller]

❯ Deploy mirror-node

**************************** Installed mirror chart ****************************

Version : v0.126.0

********************************************************************************

✔ Deploy mirror-node

✔ Enable mirror-node

❯ Check pods are ready

❯ Check Postgres DB

❯ Check REST API

❯ Check GRPC

❯ Check Monitor

❯ Check Importer

✔ Check Postgres DB

✔ Check Importer

✔ Check GRPC

✔ Check REST API

✔ Check Monitor

✔ Check pods are ready

❯ Seed DB data

❯ Insert data in public.file_data

✔ Insert data in public.file_data

✔ Seed DB data

❯ Add mirror node to remote config

✔ Add mirror node to remote config

Deploy explorer mode

solo explorer deploy --deployment "${SOLO_DEPLOYMENT}" --cluster-ref kind-${SOLO_CLUSTER_SETUP_NAMESPACE}

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : explorer deploy --deployment solo-deployment --quiet-mode

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Load remote config

✔ Load remote config

❯ Install cert manager

↓ Install cert manager [SKIPPED: Install cert manager]

❯ Install explorer

*********************** Installed hiero-explorer chart ************************

Version : 24.12.1

********************************************************************************

✔ Install explorer

❯ Install explorer ingress controller

↓ Install explorer ingress controller [SKIPPED: Install explorer ingress controller]

❯ Check explorer pod is ready

✔ Check explorer pod is ready

❯ Check haproxy ingress controller pod is ready

↓ Check haproxy ingress controller pod is ready [SKIPPED: Check haproxy ingress controller pod is ready]

❯ Add explorer to remote config

*********************************** ERROR *****************************************

Explorer deployment failed: Error deploying explorer: Invalid cluster: undefined

***********************************************************************************

Deploy a JSON RPC relay

solo relay deploy -i node1,node2,node3 --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : relay deploy --node-aliases node1,node2,node3 --deployment solo-deployment

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Check chart is installed

✔ Check chart is installed

❯ Prepare chart values

✔ Prepare chart values

❯ Deploy JSON RPC Relay

******************* Installed relay-node1-node2-node3 chart ********************

Version : v0.67.0

********************************************************************************

✔ Deploy JSON RPC Relay

❯ Check relay is running

✔ Check relay is running

❯ Check relay is ready

✔ Check relay is ready

❯ Add relay component in remote config

✔ Add relay component in remote config
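The deployment steps so far can be collected into one script. The sketch below uses only the commands and flags shown in this guide; the `run` and `deploy_all` helpers and the `DRY_RUN` switch are assumptions added for illustration. By default it only prints the commands. Note that the explorer example output above ends in an error, so that step may also fail when the script is run for real.

```bash
#!/usr/bin/env bash
# Defaults match the environment variables exported earlier in this guide.
: "${SOLO_CLUSTER_NAME:=solo}"
: "${SOLO_NAMESPACE:=solo}"
: "${SOLO_CLUSTER_SETUP_NAMESPACE:=solo-cluster}"
: "${SOLO_DEPLOYMENT:=solo-deployment}"
NODES="node1,node2,node3"
CLUSTER_REF="kind-${SOLO_CLUSTER_SETUP_NAMESPACE}"

# run CMD...
# Hypothetical helper: print the command; execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

# deploy_all: the steps of this guide in order, stopping at the first failure.
deploy_all() {
  run solo init &&
  run solo cluster-ref connect --cluster-ref "$CLUSTER_REF" --context "kind-${SOLO_CLUSTER_NAME}" &&
  run solo deployment create -n "$SOLO_NAMESPACE" --deployment "$SOLO_DEPLOYMENT" &&
  run solo deployment add-cluster --deployment "$SOLO_DEPLOYMENT" --cluster-ref "$CLUSTER_REF" --num-consensus-nodes 3 &&
  run solo node keys --gossip-keys --tls-keys -i "$NODES" --deployment "$SOLO_DEPLOYMENT" &&
  run solo cluster-ref setup -s "$SOLO_CLUSTER_SETUP_NAMESPACE" &&
  run solo network deploy -i "$NODES" --deployment "$SOLO_DEPLOYMENT" &&
  run solo node setup -i "$NODES" --deployment "$SOLO_DEPLOYMENT" &&
  run solo node start -i "$NODES" --deployment "$SOLO_DEPLOYMENT" &&
  run solo mirror-node deploy --deployment "$SOLO_DEPLOYMENT" --cluster-ref "$CLUSTER_REF" &&
  run solo explorer deploy --deployment "$SOLO_DEPLOYMENT" --cluster-ref "$CLUSTER_REF" &&
  run solo relay deploy -i "$NODES" --deployment "$SOLO_DEPLOYMENT"
}

# Prints the plan by default; set DRY_RUN=0 to execute the commands.
deploy_all
```

Review the printed plan first, then rerun with `DRY_RUN=0` once the cluster exists and the kubectl context is set.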

Execution Developer

Next: Execution Developer

Destroy relay node

solo relay destroy --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : relay destroy --node-aliases node1,node2,node3 --deployment solo-deployment

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Destroy JSON RPC Relay

*** Destroyed Relays ***

-------------------------------------------------------------------------------

- hiero-explorer [hiero-explorer-chart-24.12.1]

- mirror [hedera-mirror-0.126.0]

- solo-deployment [solo-deployment-0.50.0]

✔ Destroy JSON RPC Relay

❯ Remove relay component from remote config

✔ Remove relay component from remote config

Destroy mirror node

solo mirror-node destroy --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : mirror-node destroy --deployment solo-deployment --quiet-mode

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Destroy mirror-node

✔ Destroy mirror-node

❯ Delete PVCs

✔ Delete PVCs

❯ Uninstall mirror ingress controller

✔ Uninstall mirror ingress controller

❯ Remove mirror node from remote config

✔ Remove mirror node from remote config

Destroy explorer node

solo explorer destroy --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : explorer destroy --deployment solo-deployment --quiet-mode

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Load remote config

✔ Load remote config

❯ Destroy explorer

✔ Destroy explorer

❯ Uninstall explorer ingress controller

✔ Uninstall explorer ingress controller

❯ Remove explorer from remote config

*********************************** ERROR *****************************************

Explorer destruction failed: Error destroy explorer: Component mirrorNodeExplorer of type mirrorNodeExplorers not found while attempting to remove

***********************************************************************************

Destroy network

solo network destroy --deployment "${SOLO_DEPLOYMENT}"

- Example output

******************************* Solo *********************************************

Version : 0.36.0

Kubernetes Context : kind-solo-e2e

Kubernetes Cluster : kind-solo-e2e

Current Command : network destroy --deployment solo-deployment --quiet-mode

**********************************************************************************

❯ Initialize

❯ Acquire lock

✔ Acquire lock - lock acquired successfully, attempt: 1/10

✔ Initialize

❯ Remove deployment from local configuration

✔ Remove deployment from local configuration

❯ Running sub-tasks to destroy network

✔ Deleting the RemoteConfig configmap in namespace solo
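The four destroy commands above can be run as one teardown script. This is a sketch that reuses only the commands shown in this guide; `run`, `destroy_all`, and `DRY_RUN` are assumptions. Unlike a deployment, the teardown continues after a failed step (the explorer example above ends in an error) and reports failure at the end.

```bash
#!/usr/bin/env bash
# Default matches the environment variable exported earlier in this guide.
: "${SOLO_DEPLOYMENT:=solo-deployment}"

# run CMD...
# Hypothetical helper: print the command; execute it only when DRY_RUN=0.
run() {
  printf '+ %s\n' "$*"
  if [ "${DRY_RUN:-1}" = "0" ]; then
    "$@"
  fi
}

# destroy_all: run every destroy step, counting failures instead of stopping.
destroy_all() {
  local failed=0
  run solo relay destroy --deployment "$SOLO_DEPLOYMENT" || failed=$((failed + 1))
  run solo mirror-node destroy --deployment "$SOLO_DEPLOYMENT" || failed=$((failed + 1))
  run solo explorer destroy --deployment "$SOLO_DEPLOYMENT" || failed=$((failed + 1))
  run solo network destroy --deployment "$SOLO_DEPLOYMENT" || failed=$((failed + 1))
  [ "$failed" -eq 0 ]
}

# Prints the plan by default; set DRY_RUN=0 to execute the commands.
destroy_all
```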

You may view the list of pods using k9s as below:

Context: kind-solo <0> all <a> Attach <ctr… ____ __.________

Cluster: kind-solo <ctrl-d> Delete <l> | |/ _/ __ \______

User: kind-solo <d> Describe <p> | < \____ / ___/

K9s Rev: v0.32.5 <e> Edit <shif| | \ / /\___ \

K8s Rev: v1.27.3 <?> Help <z> |____|__ \ /____//____ >

CPU: n/a <shift-j> Jump Owner <s> \/ \/

MEM: n/a

┌───────────────────────────────────────────────── Pods(all)[31] ─────────────────────────────────────────────────┐

│ NAMESPACE↑ NAME PF READY STATUS RESTARTS I │

│ kube-system coredns-5d78c9869d-994t4 ● 1/1 Running 0 1 │

│ kube-system coredns-5d78c9869d-vgt4q ● 1/1 Running 0 1 │

│ kube-system etcd-solo-control-plane ● 1/1 Running 0 1 │

│ kube-system kindnet-q26c9 ● 1/1 Running 0 1 │

│ kube-system kube-apiserver-solo-control-plane ● 1/1 Running 0 1 │

│ kube-system kube-controller-manager-solo-control-plane ● 1/1 Running 0 1 │

│ kube-system kube-proxy-9b27j ● 1/1 Running 0 1 │

│ kube-system kube-scheduler-solo-control-plane ● 1/1 Running 0 1 │

│ local-path-storage local-path-provisioner-6bc4bddd6b-4mv8c ● 1/1 Running 0 1 │

│ solo envoy-proxy-node1-65f8879dcc-rwg97 ● 1/1 Running 0 1 │

│ solo envoy-proxy-node2-667f848689-628cx ● 1/1 Running 0 1 │

│ solo envoy-proxy-node3-6bb4b4cbdf-dmwtr ● 1/1 Running 0 1 │

│ solo solo-deployment-grpc-75bb9c6c55-l7kvt ● 1/1 Running 0 1 │

│ solo solo-deployment-hiero-explorer-6565ccb4cb-9dbw2 ● 1/1 Running 0 1 │

│ solo solo-deployment-importer-dd74fd466-vs4mb ● 1/1 Running 0 1 │

│ solo solo-deployment-monitor-54b8f57db9-fn5qq ● 1/1 Running 0 1 │

│ solo solo-deployment-postgres-postgresql-0 ● 1/1 Running 0 1 │

│ solo solo-deployment-redis-node-0 ● 2/2 Running 0 1 │

│ solo solo-deployment-rest-6d48f8dbfc-plbp2 ● 1/1 Running 0 1 │

│ solo solo-deployment-restjava-5d6c4cb648-r597f ● 1/1 Running 0 1 │

│ solo solo-deployment-web3-55fdfbc7f7-lzhfl ● 1/1 Running 0 1 │

│ solo haproxy-node1-785b9b6f9b-676mr ● 1/1 Running 1 1 │

│ solo haproxy-node2-644b8c76d-v9mg6 ● 1/1 Running 1 1 │

│ solo haproxy-node3-fbffdb64-272t2 ● 1/1 Running 1 1 │

│ solo minio-pool-1-0 ● 2/2 Running 1 1 │

│ solo network-node1-0 ● 5/5 Running 2 1 │

│ solo network-node2-0 ● 5/5 Running 2 1 │

│ solo network-node3-0 ● 5/5 Running 2 1 │

│ solo relay-node1-node2-node3-hedera-json-rpc-relay-ddd4c8d8b-hdlpb ● 1/1 Running 0 1 │

│ solo-cluster console-557956d575-c5qp7 ● 1/1 Running 0 1 │

│ solo-cluster minio-operator-7d575c5f84-xdwwz ● 1/1 Running 0 1 │

│ │

└─────────────────────────────────────────────────────────────────────────────────────────────────────────────────┘

3 - Solo CLI User Manual

Solo Command Line User Manual

Solo provides a set of commands, some of which have subcommands. You can get help in the following ways:

solo --help returns the help information for the solo command and shows which commands are available.

solo command --help returns the help information for the specified command and shows its subcommands and options.

solo account --help

Manage Hedera accounts in solo network

Commands:

account init Initialize system accounts with new keys

account create Creates a new account with a new key and stores the key in the Kubernetes secrets, if you supply no key one will be generated for you, otherwise you may supply either a ECDSA or ED25519 private key

account update Updates an existing account with the provided info, if you want to update the private key, you can supply either ECDSA or ED25519 but not both

account get Gets the account info including the current amount of HBAR

Options:

--dev Enable developer mode [boolean]

--force-port-forward Force port forward to access the network services

[boolean]

-h, --help Show help [boolean]

-v, --version Show version number [boolean]

`solo command subcommand --help` returns the help information for a specific subcommand and shows which options it accepts. For example:

solo account create --help

Creates a new account with a new key and stores the key in the Kubernetes secrets, if you supply no key one will be generated for you, otherwise you may supply either a ECDSA or ED25519 private key

Options:

--dev Enable developer mode [boolean]

--force-port-forward Force port forward to access the network services

[boolean]

--hbar-amount Amount of HBAR to add [number]

--create-amount Amount of new account to create [number]

--ecdsa-private-key ECDSA private key for the Hedera account [string]

-d, --deployment The name the user will reference locally to link to

a deployment [string]

--ed25519-private-key ED25519 private key for the Hedera account [string]

--generate-ecdsa-key Generate ECDSA private key for the Hedera account

[boolean]

--set-alias Sets the alias for the Hedera account when it is created, requires --ecdsa-private-key [boolean]

-c, --cluster-ref The cluster reference that will be used for referencing the Kubernetes cluster and stored in the local and remote configuration for the deployment. For commands that take multiple clusters they can be separated by commas. [string]

-h, --help Show help [boolean]

-v, --version Show version number [boolean]
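The three help levels described above can be listed in one pass. The following is a minimal sketch that only prints the commands (a dry run), so it can be read without Solo installed. The `account` and `account create` scopes come from the examples in this section; the loop itself is illustrative and not part of Solo.

```shell
# Dry-run sketch: print the help command for each level
# (root, command, subcommand) instead of executing it.
help_cmds=""
for scope in "" "account" "account create"; do
  # ${scope:+ $scope} expands to " <scope>" only when scope is non-empty.
  line="solo${scope:+ $scope} --help"
  echo "$line"
  help_cmds="${help_cmds}${line};"
done
```

To run the real help output instead, execute each printed line in a shell where `solo` is on the `PATH`.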

For more information see: Solo CLI Commands

4 - Solo CLI Commands

Solo Command Reference

Root Help Output

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js --help

Select a command

Usage:

solo <command> [options]

Commands:

init Initialize local environment

account Manage Hedera accounts in solo network

cluster-ref Manage solo testing cluster

network Manage solo network deployment

node Manage Hedera platform node in solo network

relay Manage JSON RPC relays in solo network

mirror-node Manage Hedera Mirror Node in solo network

explorer Manage Explorer in solo network

deployment Manage solo network deployment

block Manage block related components in solo network

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-v, --version Show version number [boolean]

init

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js init --help

init

Initialize local environment

Options:

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-u, --user Optional user name used for [string]

local configuration. Only

accepts letters and numbers.

Defaults to the username

provided by the OS

-v, --version Show version number [boolean]

account

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js account --help

Select an account command

account

Manage Hedera accounts in solo network

Commands:

account init Initialize system accounts with new keys

account create Creates a new account with a new key and stores the key in the Kubernetes secrets, if you supply no key one will be generated for you, otherwise you may supply either a ECDSA or ED25519 private key

account update Updates an existing account with the provided info, if you want to update the private key, you can supply either ECDSA or ED25519 but not both

account get Gets the account info including the current amount of HBAR

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-v, --version Show version number [boolean]

account init

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js account init --help

account init

Initialize system accounts with new keys

Options:

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

-d, --deployment The name the user will [string]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

-v, --version Show version number [boolean]

account create

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js account create --help

account create

Creates a new account with a new key and stores the key in the Kubernetes secrets, if you supply no key one will be generated for you, otherwise you may supply either a ECDSA or ED25519 private key

Options:

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

--create-amount Amount of new account to [number] [default: 1]

create

-d, --deployment The name the user will [string]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--ecdsa-private-key ECDSA private key for the [string]

Hedera account

--ed25519-private-key ED25519 private key for the [string]

Hedera account

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--generate-ecdsa-key Generate ECDSA private key for [boolean] [default: false]

the Hedera account

--hbar-amount Amount of HBAR to add [number] [default: 100]

--set-alias Sets the alias for the Hedera [boolean] [default: false]

account when it is created,

requires --ecdsa-private-key

-v, --version Show version number [boolean]
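As an illustration of the options above, the sketch below composes an `account create` call. It assumes a deployment named `solo-deployment` (an assumption, substitute your own) and uses a hypothetical `solo_cmd` wrapper that prints the command by default so the example is safe to run anywhere.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

DEPLOYMENT="solo-deployment"  # assumed deployment name

# Create one account, add 500 HBAR, and ask Solo to generate an ECDSA key.
create_out="$(solo_cmd account create --deployment "$DEPLOYMENT" \
  --hbar-amount 500 --generate-ecdsa-key)"
echo "$create_out"
```

Only flags listed in the help output above are used; `--set-alias` is left out because the help text says it requires `--ecdsa-private-key`.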

account update

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js account update --help

account update

Updates an existing account with the provided info, if you want to update the private key, you can supply either ECDSA or ED25519 but not both

Options:

--account-id The Hedera account id, e.g.: [string]

0.0.1001

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

-d, --deployment The name the user will [string]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--ecdsa-private-key ECDSA private key for the [string]

Hedera account

--ed25519-private-key ED25519 private key for the [string]

Hedera account

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--hbar-amount Amount of HBAR to add [number] [default: 100]

-v, --version Show version number [boolean]

account get

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js account get --help

account get

Gets the account info including the current amount of HBAR

Options:

--account-id The Hedera account id, e.g.: [string]

0.0.1001

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

-d, --deployment The name the user will [string]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--private-key Show private key information [boolean] [default: false]

-v, --version Show version number [boolean]
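A short sketch of how `account update` and `account get` might be combined: add HBAR to an account, then read it back. The deployment name `solo-deployment` is an assumption, the account id is the example value from the help text, and `solo_cmd` is a hypothetical dry-run wrapper rather than a Solo feature.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

DEPLOYMENT="solo-deployment"  # assumed deployment name
ACCOUNT_ID="0.0.1001"         # example id taken from the help text

# Add 50 HBAR to the account.
update_out="$(solo_cmd account update --deployment "$DEPLOYMENT" \
  --account-id "$ACCOUNT_ID" --hbar-amount 50)"
echo "$update_out"

# Fetch the account info, including private key information.
get_out="$(solo_cmd account get --deployment "$DEPLOYMENT" \
  --account-id "$ACCOUNT_ID" --private-key)"
echo "$get_out"
```

Omit `--private-key` when the output may end up in shared logs.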

cluster-ref

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref --help

Select a context command

cluster-ref

Manage solo testing cluster

Commands:

cluster-ref connect associates a cluster reference to a k8s context

cluster-ref disconnect dissociates a cluster reference from a k8s context

cluster-ref list List all available clusters

cluster-ref info Get cluster info

cluster-ref setup Setup cluster with shared components

cluster-ref reset Uninstall shared components from cluster

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-v, --version Show version number [boolean]

cluster-ref connect

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref connect --help

Missing required argument: cluster-ref

cluster-ref connect

associates a cluster reference to a k8s context

Options:

-c, --cluster-ref The cluster reference that [string] [required]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

--context The Kubernetes context name to [string]

be used

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]
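The sketch below shows one way to associate a cluster reference with a Kubernetes context. Both names are assumptions: `kind-solo` is the context Kind would typically create for a cluster named `solo`, and the same string is reused as the cluster reference. `solo_cmd` is a hypothetical dry-run wrapper.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

CLUSTER_REF="kind-solo"   # assumed cluster reference name
KUBE_CONTEXT="kind-solo"  # assumed kubectl context name

# Associate the cluster reference with the Kubernetes context.
connect_out="$(solo_cmd cluster-ref connect --cluster-ref "$CLUSTER_REF" \
  --context "$KUBE_CONTEXT")"
echo "$connect_out"
```

`--cluster-ref` is marked `[required]` in the help output, which is why running the command without it prints "Missing required argument: cluster-ref".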

cluster-ref disconnect

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref disconnect --help

Missing required argument: cluster-ref

cluster-ref disconnect

dissociates a cluster reference from a k8s context

Options:

-c, --cluster-ref The cluster reference that [string] [required]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

cluster-ref list

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref list --help

cluster-ref list

List all available clusters

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

cluster-ref info

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref info --help

Missing required argument: cluster-ref

cluster-ref info

Get cluster info

Options:

-c, --cluster-ref The cluster reference that [string] [required]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

cluster-ref setup

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref setup --help

cluster-ref setup

Setup cluster with shared components

Options:

--chart-dir Local chart directory path [string]

(e.g. ~/solo-charts/charts

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

-s, --cluster-setup-namespace Cluster Setup Namespace [string] [default: "solo-setup"]

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--minio Deploy minio operator [boolean] [default: true]

--prometheus-stack Deploy prometheus stack [boolean] [default: false]

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

--solo-chart-version Solo testing chart version [string] [default: "0.53.0"]

-v, --version Show version number [boolean]

cluster-ref reset

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js cluster-ref reset --help

cluster-ref reset

Uninstall shared components from cluster

Options:

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

-s, --cluster-setup-namespace Cluster Setup Namespace [string] [default: "solo-setup"]

--dev Enable developer mode [boolean] [default: false]

-f, --force Force actions even if those [boolean] [default: false]

can be skipped

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

network

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js network --help

Select a chart command

network

Manage solo network deployment

Commands:

network deploy Deploy solo network. Requires the chart `solo-cluster-setup` to have been installed in the cluster. If it hasn't, the following command can be run: `solo cluster-ref setup`

network destroy Destroy solo network. If both --delete-pvcs and --delete-secrets are set to true, the namespace will be deleted.

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-v, --version Show version number [boolean]

network deploy

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js network deploy --help

network deploy

Deploy solo network. Requires the chart `solo-cluster-setup` to have been installed in the cluster. If it hasn't, the following command can be run: `solo cluster-ref setup`

Options:

--api-permission-properties api-permission.properties file [string] [default: "templates/api-permission.properties"]

for node

--app Testing app name [string] [default: "HederaNode.jar"]

--application-env the application.env file for [string] [default: "templates/application.env"]

the node provides environment

variables to the

solo-container to be used when

the hedera platform is started

--application-properties application.properties file [string] [default: "templates/application.properties"]

for node

--aws-bucket name of aws storage bucket [string]

--aws-bucket-prefix path prefix of aws storage [string]

bucket

--aws-endpoint aws storage endpoint URL [string]

--aws-write-access-key aws storage access key for [string]

write access

--aws-write-secrets aws storage secret key for [string]

write access

--backup-bucket name of bucket for backing up [string]

state files

--backup-endpoint backup storage endpoint URL [string]

--backup-provider backup storage service [string] [default: "GCS"]

provider, GCS or AWS

--backup-region backup storage region [string] [default: "us-central1"]

--backup-write-access-key backup storage access key for [string]

write access

--backup-write-secrets backup storage secret key for [string]

write access

--bootstrap-properties bootstrap.properties file for [string] [default: "templates/bootstrap.properties"]

node

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--chart-dir Local chart directory path [string]

(e.g. ~/solo-charts/charts

--debug-node-alias Enable default jvm debug port [string]

(5005) for the given node id

-d, --deployment The name the user will [string]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--domain-names Custom domain names for [string]

consensus nodes mapping for

the (e.g. node0=domain.name

where key is node alias and

value is domain name) with

multiple nodes comma separated

--envoy-ips IP mapping where key = value [string]

is node alias and static ip

for envoy proxy, (e.g.:

--envoy-ips

node1=127.0.0.1,node2=127.0.0.1)

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--gcs-bucket name of gcs storage bucket [string]

--gcs-bucket-prefix path prefix of google storage [string]

bucket

--gcs-endpoint gcs storage endpoint URL [string]

--gcs-write-access-key gcs storage access key for [string]

write access

--gcs-write-secrets gcs storage secret key for [string]

write access

--genesis-throttles-file throttles.json file used [string]

during network genesis

--grpc-tls-cert TLS Certificate path for the [string]

gRPC (e.g.

"node1=/Users/username/node1-grpc.cert" with multiple nodes comma separated)

--grpc-tls-key TLS Certificate key path for [string]

the gRPC (e.g.

"node1=/Users/username/node1-grpc.key" with multiple nodes comma separated)

--grpc-web-tls-cert TLS Certificate path for gRPC [string]

Web (e.g.

"node1=/Users/username/node1-grpc-web.cert" with multiple nodes comma separated)

--grpc-web-tls-key TLS Certificate key path for [string]

gRPC Web (e.g.

"node1=/Users/username/node1-grpc-web.key" with multiple nodes comma separated)

--haproxy-ips IP mapping where key = value [string]

is node alias and static ip

for haproxy, (e.g.:

--haproxy-ips

node1=127.0.0.1,node2=127.0.0.1)

-l, --ledger-id Ledger ID (a.k.a. Chain ID) [string] [default: "298"]

--load-balancer Enable load balancer for [boolean] [default: false]

network node proxies

--log4j2-xml log4j2.xml file for node [string] [default: "templates/log4j2.xml"]

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

--profile Resource profile (local | tiny [string] [default: "local"]

| small | medium | large)

--profile-file Resource profile definition [string] [default: "profiles/custom-spec.yaml"]

(e.g. custom-spec.yaml)

--prometheus-svc-monitor Enable prometheus service [boolean] [default: false]

monitor for the network nodes

--pvcs Enable persistent volume [boolean] [default: false]

claims to store data outside

the pod, required for node add

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-t, --release-tag Release tag to be used (e.g. [string] [default: "v0.60.1"]

v0.60.1)

--settings-txt settings.txt file for node [string] [default: "templates/settings.txt"]

--solo-chart-version Solo testing chart version [string] [default: "0.53.0"]

--storage-type storage type for saving stream [default: "minio_only"]

files, available options are

minio_only, aws_only,

gcs_only, aws_and_gcs

-f, --values-file Comma separated chart values [string]

file paths for each cluster

(e.g.

values.yaml,cluster-1=./a/b/values1.yaml,cluster-2=./a/b/values2.yaml)

-v, --version Show version number [boolean]
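Several options above take a comma-separated list of node aliases. The sketch below builds such a list for a three-node network and passes it to `network deploy`. The alias pattern `node1`, `node2`, ... and the deployment name `solo-deployment` are assumptions, and `solo_cmd` is a hypothetical dry-run wrapper.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

DEPLOYMENT="solo-deployment"  # assumed deployment name
NODE_COUNT=3

# Build "node1,node2,node3": the comma is added only between entries.
aliases=""
i=1
while [ "$i" -le "$NODE_COUNT" ]; do
  aliases="${aliases}${aliases:+,}node${i}"
  i=$((i + 1))
done

deploy_out="$(solo_cmd network deploy --deployment "$DEPLOYMENT" \
  --node-aliases "$aliases")"
echo "$deploy_out"
```

As the command description notes, the `solo-cluster-setup` chart must already be installed (via `solo cluster-ref setup`) before a real deploy.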

network destroy

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js network destroy --help

network destroy

Destroy solo network. If both --delete-pvcs and --delete-secrets are set to true, the namespace will be deleted.

Options:

--delete-pvcs Delete the persistent volume [boolean] [default: false]

claims. If both --delete-pvcs

and --delete-secrets are

set to true, the namespace

will be deleted.

--delete-secrets Delete the network secrets. If [boolean] [default: false]

both --delete-pvcs and

--delete-secrets are set to

true, the namespace will be

deleted.

-d, --deployment The name the user will [string]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--enable-timeout enable time out for running a [boolean] [default: false]

command

-f, --force Force actions even if those [boolean] [default: false]

can be skipped

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]
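The help text states that setting both `--delete-pvcs` and `--delete-secrets` also deletes the namespace. A minimal sketch of that full teardown follows, assuming a deployment named `solo-deployment` and using a hypothetical `solo_cmd` wrapper that prints the command by default, which is a sensible safeguard for a destructive operation.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

DEPLOYMENT="solo-deployment"  # assumed deployment name

# Both flags together: PVCs, secrets, and the namespace are removed.
destroy_out="$(solo_cmd network destroy --deployment "$DEPLOYMENT" \
  --delete-pvcs --delete-secrets)"
echo "$destroy_out"
```

Dropping either flag keeps the namespace in place, according to the option descriptions above.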

node

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node --help

Select a node command

node

Manage Hedera platform node in solo network

Commands:

node setup Setup node with a specific version of Hedera platform

node start Start a node

node stop Stop a node

node freeze Freeze all nodes of the network

node restart Restart all nodes of the network

node keys Generate node keys

node refresh Reset and restart a node

node logs Download application logs from the network nodes and stores them in <SOLO_LOGS_DIR>/<namespace>/<podName>/ directory

node states Download hedera states from the network nodes and stores them in <SOLO_LOGS_DIR>/<namespace>/<podName>/ directory

node add Adds a node with a specific version of Hedera platform

node add-prepare Prepares the addition of a node with a specific version of Hedera platform

node add-submit-transactions Submits NodeCreateTransaction and Upgrade transactions to the network nodes

node add-execute Executes the addition of a previously prepared node

node update Update a node with a specific version of Hedera platform

node update-prepare Prepare the deployment to update a node with a specific version of Hedera platform

node update-submit-transactions Submit transactions for updating a node with a specific version of Hedera platform

node update-execute Executes the updating of a node with a specific version of Hedera platform

node delete Delete a node with a specific version of Hedera platform

node delete-prepare Prepares the deletion of a node with a specific version of Hedera platform

node delete-submit-transactions Submits transactions to the network nodes for deleting a node

node delete-execute Executes the deletion of a previously prepared node

node prepare-upgrade Prepare the network for a Freeze Upgrade operation

node freeze-upgrade Performs a Freeze Upgrade operation on the network after it has been prepared with prepare-upgrade

node upgrade upgrades all nodes on the network

node upgrade-prepare Prepare the deployment to upgrade network

node upgrade-submit-transactions Submit transactions for upgrading network

node upgrade-execute Executes the upgrade of the network

node download-generated-files Downloads the generated files from an existing node

Options:

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-v, --version Show version number [boolean]

node setup

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node setup --help

Missing required argument: deployment

node setup

Setup node with a specific version of Hedera platform

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--admin-public-keys Comma separated list of DER [string]

encoded ED25519 public keys

and must match the order of

the node aliases

--app Testing app name [string] [default: "HederaNode.jar"]

--app-config json config file of testing [string]

app

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--dev Enable developer mode [boolean] [default: false]

--domain-names Custom domain names for [string]

consensus nodes mapping for

the (e.g. node0=domain.name

where key is node alias and

value is domain name) with

multiple nodes comma separated

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--local-build-path path of hedera local repo [string]

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-t, --release-tag Release tag to be used (e.g. [string] [default: "v0.60.1"]

v0.60.1)

-v, --version Show version number [boolean]

node start

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node start --help

Missing required argument: deployment

node start

Start a node

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--app Testing app name [string] [default: "HederaNode.jar"]

--debug-node-alias Enable default jvm debug port [string]

(5005) for the given node id

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-t, --release-tag Release tag to be used (e.g. [string] [default: "v0.60.1"]

v0.60.1)

--stake-amounts The amount to be staked in the [string]

same order you list the node

aliases with multiple node

staked values comma separated

--state-file A zipped state file to be used [string]

for the network

-v, --version Show version number [boolean]
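A sketch of running `node setup` followed by `node start` for the same set of nodes. It assumes the network has already been deployed, a deployment named `solo-deployment`, and node aliases `node1,node2,node3`; all three are assumptions, and `solo_cmd` is a hypothetical dry-run wrapper.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

DEPLOYMENT="solo-deployment"  # assumed deployment name
NODES="node1,node2,node3"     # assumed node aliases

# Set up the platform software on each node, then start the nodes.
setup_out="$(solo_cmd node setup --deployment "$DEPLOYMENT" -i "$NODES")"
start_out="$(solo_cmd node start --deployment "$DEPLOYMENT" -i "$NODES")"
echo "$setup_out"
echo "$start_out"
```

Per the help text, leaving out `-i` means all nodes, and `-t` can pin a release tag other than the default.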

node stop

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node stop --help

Missing required argument: deployment

node stop

Stop a node

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

node freeze

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node freeze --help

Missing required argument: deployment

node freeze

Freeze all nodes of the network

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

node restart

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node restart --help

Missing required argument: deployment

node restart

Restart all nodes of the network

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

node keys

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node keys --help

Missing required argument: deployment

node keys

Generate node keys

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--gossip-keys Generate gossip keys for nodes [boolean] [default: false]

-n, --namespace Namespace [string]

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

--tls-keys Generate gRPC TLS keys for [boolean] [default: false]

nodes

-v, --version Show version number [boolean]
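Both key types default to `false`, so a call without `--gossip-keys` or `--tls-keys` would not request either kind of key. The sketch below requests both for three nodes. The deployment name and node aliases are assumptions, and `solo_cmd` is a hypothetical dry-run wrapper.

```shell
# Hypothetical wrapper (not part of Solo): prints the command by default;
# set SOLO_DRY_RUN=0 to run the real CLI.
solo_cmd() {
  if [ "${SOLO_DRY_RUN:-1}" = "1" ]; then
    echo "solo $*"
  else
    solo "$@"
  fi
}

DEPLOYMENT="solo-deployment"  # assumed deployment name
NODES="node1,node2,node3"     # assumed node aliases

# Generate gossip keys and gRPC TLS keys for the listed nodes.
keys_out="$(solo_cmd node keys --gossip-keys --tls-keys \
  -i "$NODES" --deployment "$DEPLOYMENT")"
echo "$keys_out"
```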

node refresh

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node refresh --help

Missing required argument: deployment

node refresh

Reset and restart a node

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--app Testing app name [string] [default: "HederaNode.jar"]

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--dev Enable developer mode [boolean] [default: false]

--domain-names Custom domain names for [string]

consensus nodes mapping for

the (e.g. node0=domain.name

where key is node alias and

value is domain name) with

multiple nodes comma separated

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--local-build-path path of hedera local repo [string]

-i, --node-aliases Comma separated node aliases [string]

(empty means all nodes)

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-t, --release-tag Release tag to be used (e.g. [string] [default: "v0.60.1"]

v0.60.1)

-v, --version Show version number [boolean]

node logs

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node logs --help

Missing required arguments: deployment, node-aliases

node logs

Download application logs from the network nodes and stores them in <SOLO_LOGS_DIR>/<namespace>/<podName>/ directory

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

-i, --node-aliases Comma separated node aliases [string] [required]

(empty means all nodes)

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

node states

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node states --help

Missing required arguments: deployment, node-aliases

node states

Download hedera states from the network nodes and stores them in <SOLO_LOGS_DIR>/<namespace>/<podName>/ directory

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

-i, --node-aliases Comma separated node aliases [string] [required]

(empty means all nodes)

--dev Enable developer mode [boolean] [default: false]

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-v, --version Show version number [boolean]

node add

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node add --help

Missing required argument: deployment

node add

Adds a node with a specific version of Hedera platform

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--admin-key Admin key [string] [default: "***"]

--app Testing app name [string] [default: "HederaNode.jar"]

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--chart-dir Local chart directory path [string]

(e.g. ~/solo-charts/charts)

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

--debug-node-alias Enable default jvm debug port [string]

(5005) for the given node id

--dev Enable developer mode [boolean] [default: false]

--domain-names Custom domain names for [string]

consensus nodes mapping for

the (e.g. node0=domain.name

where key is node alias and

value is domain name) with

multiple nodes comma separated

--endpoint-type Endpoint type (IP or FQDN) [string] [default: "FQDN"]

--envoy-ips IP mapping (key=value) where [string]

key is node alias and value is

static IP for envoy proxy (e.g.

--envoy-ips

node1=127.0.0.1,node2=127.0.0.1)

-f, --force Force actions even if those [boolean] [default: false]

can be skipped

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--gossip-endpoints Comma separated gossip [string]

endpoints of the node (e.g.

first one is internal, second

one is external)

--gossip-keys Generate gossip keys for nodes [boolean] [default: false]

--grpc-endpoints Comma separated gRPC endpoints [string]

of the node (at most 8)

--grpc-tls-cert TLS Certificate path for the [string]

gRPC (e.g.

"node1=/Users/username/node1-grpc.cert" with multiple nodes comma separated)

--grpc-tls-key TLS Certificate key path for [string]

the gRPC (e.g.

"node1=/Users/username/node1-grpc.key" with multiple nodes comma separated)

--grpc-web-tls-cert TLS Certificate path for gRPC [string]

Web (e.g.

"node1=/Users/username/node1-grpc-web.cert" with multiple nodes comma separated)

--grpc-web-tls-key TLS Certificate key path for [string]

gRPC Web (e.g.

"node1=/Users/username/node1-grpc-web.key" with multiple nodes comma separated)

--haproxy-ips IP mapping (key=value) where [string]

key is node alias and value is

static IP for haproxy (e.g.

--haproxy-ips

node1=127.0.0.1,node2=127.0.0.1)

-l, --ledger-id Ledger ID (a.k.a. Chain ID) [string] [default: "298"]

--local-build-path Path of the local Hedera repo [string]

--pvcs Enable persistent volume [boolean] [default: false]

claims to store data outside

the pod, required for node add

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-t, --release-tag Release tag to be used (e.g. [string] [default: "v0.60.1"]

v0.60.1)

--solo-chart-version Solo testing chart version [string] [default: "0.53.0"]

--tls-keys Generate gRPC TLS keys for [boolean] [default: false]

nodes

-v, --version Show version number [boolean]
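Several `node add` options (`--domain-names`, `--envoy-ips`, `--haproxy-ips`) take a comma-separated list of `alias=value` pairs. The sketch below shows one way to assemble such a value in a shell script. The helper name `join_pairs`, the deployment name `my-deployment`, and the IP addresses are illustrative assumptions; the final line only prints the resulting command rather than running it.

```shell
# join_pairs <alias=value>...
# Hypothetical helper: joins its arguments with commas.
# The body runs in a subshell, so the IFS change does not leak out.
join_pairs() (
  IFS=,
  echo "$*"
)

# Build the mapping in the format shown in the help text.
ENVOY_IPS="$(join_pairs node1=127.0.0.1 node2=127.0.0.1)"

# Print the command that would be run (dry run only).
echo "solo node add --deployment my-deployment --envoy-ips ${ENVOY_IPS}"
```

Building the mapping from separate arguments keeps each pair on its own line in longer scripts, which can make alias typos easier to spot.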

node add-prepare

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node add-prepare --help

Missing required arguments: deployment, output-dir

node add-prepare

Prepares the addition of a node with a specific version of Hedera platform

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--output-dir Path to the directory where [string] [required]

the command context will be

saved to

--admin-key Admin key [string] [default: "***"]

--app Testing app name [string] [default: "HederaNode.jar"]

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--chart-dir Local chart directory path [string]

(e.g. ~/solo-charts/charts)

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote

configuration for the

deployment. For commands that

take multiple clusters they

can be separated by commas.

--debug-node-alias Enable default jvm debug port [string]

(5005) for the given node id

--dev Enable developer mode [boolean] [default: false]

--domain-names Custom domain names for [string]

consensus nodes mapping for

the (e.g. node0=domain.name

where key is node alias and

value is domain name) with

multiple nodes comma separated

--endpoint-type Endpoint type (IP or FQDN) [string] [default: "FQDN"]

-f, --force Force actions even if those [boolean] [default: false]

can be skipped

--force-port-forward Force port forward to access [boolean] [default: true]

the network services

--gossip-endpoints Comma separated gossip [string]

endpoints of the node (e.g.

first one is internal, second

one is external)

--gossip-keys Generate gossip keys for nodes [boolean] [default: false]

--grpc-endpoints Comma separated gRPC endpoints [string]

of the node (at most 8)

--grpc-tls-cert TLS Certificate path for the [string]

gRPC (e.g.

"node1=/Users/username/node1-grpc.cert" with multiple nodes comma separated)

--grpc-tls-key TLS Certificate key path for [string]

the gRPC (e.g.

"node1=/Users/username/node1-grpc.key" with multiple nodes comma separated)

--grpc-web-tls-cert TLS Certificate path for gRPC [string]

Web (e.g.

"node1=/Users/username/node1-grpc-web.cert" with multiple nodes comma separated)

--grpc-web-tls-key TLS Certificate key path for [string]

gRPC Web (e.g.

"node1=/Users/username/node1-grpc-web.key" with multiple nodes comma separated)

-l, --ledger-id Ledger ID (a.k.a. Chain ID) [string] [default: "298"]

--local-build-path Path of the local Hedera repo [string]

--pvcs Enable persistent volume [boolean] [default: false]

claims to store data outside

the pod, required for node add

-q, --quiet-mode Quiet mode, do not prompt for [boolean] [default: false]

confirmation

-t, --release-tag Release tag to be used (e.g. [string] [default: "v0.60.1"]

v0.60.1)

--solo-chart-version Solo testing chart version [string] [default: "0.53.0"]

--tls-keys Generate gRPC TLS keys for [boolean] [default: false]

nodes

-v, --version Show version number [boolean]
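`node add-prepare` saves its command context to `--output-dir`, and `node add-submit-transactions` loads a context from `--input-dir`, so a script would presumably pass the same directory to both. The sketch below illustrates that hand-off. The helper name `prepare_and_submit`, the `SOLO_BIN` variable, the deployment name, and the directory path are assumptions for illustration; any further steps a full node addition may need are not shown. By default the commands are only printed.

```shell
# Dry run by default: prints the commands instead of executing them.
# Set SOLO_BIN=solo to invoke the real CLI (assumes solo is on PATH).
SOLO_BIN="${SOLO_BIN:-echo solo}"

# prepare_and_submit <deployment> <context directory>
# Hypothetical helper: the directory written by add-prepare is reused
# as the input of add-submit-transactions. The second command runs
# only if the first one succeeds.
prepare_and_submit() {
  $SOLO_BIN node add-prepare --deployment "$1" --output-dir "$2" &&
    $SOLO_BIN node add-submit-transactions --deployment "$1" --input-dir "$2"
}

prepare_and_submit my-deployment ./node-add-context
```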

node add-submit-transactions

> @hashgraph/solo@0.36.1 solo

> node --no-deprecation --no-warnings dist/solo.js node add-submit-transactions --help

Missing required arguments: deployment, input-dir

node add-submit-transactions

Submits NodeCreateTransaction and Upgrade transactions to the network nodes

Options:

-d, --deployment The name the user will [string] [required]

reference locally to link to a

deployment

--input-dir Path to the directory where [string] [required]

the command context will be

loaded from

--app Testing app name [string] [default: "HederaNode.jar"]

--cache-dir Local cache directory [string] [default: "/Users/user/.solo/cache"]

--chart-dir Local chart directory path [string]

(e.g. ~/solo-charts/charts)

-c, --cluster-ref The cluster reference that [string]

will be used for referencing

the Kubernetes cluster and

stored in the local and remote